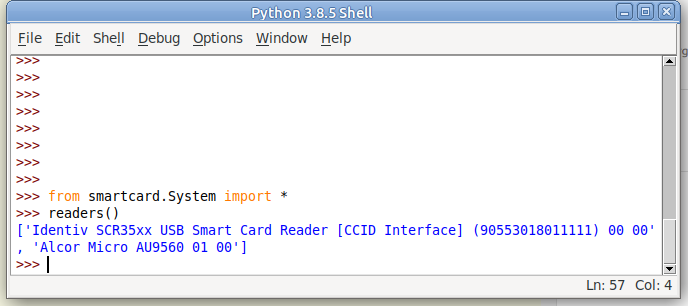

I wrote not too long ago about how LTE access is not liked WiFi, after a lot of confusion amongst new Open5Gs users coming to LTE for the first time and expecting it to act like a Layer 2 network.

But 5G brings a new feature that changes that;

PDU Session Type: The type of PDU Session which can be IPv4, IPv6, IPv4v6, Ethernet or Unstructured

ETSI TS 123 501 – System Architecture for the 5G System

No longer are we limited to just IP transport, meaning at long last I can transport my Token Ring traffic over 5G, or in reality, customers can extend Layer 2 networks (Ethernet) over 3GPP technologies, without resorting to overlay networking, and much more importantly, fixed line networks, typically run at Layer 2, can leverage the 5G core architecture.

How does this work?

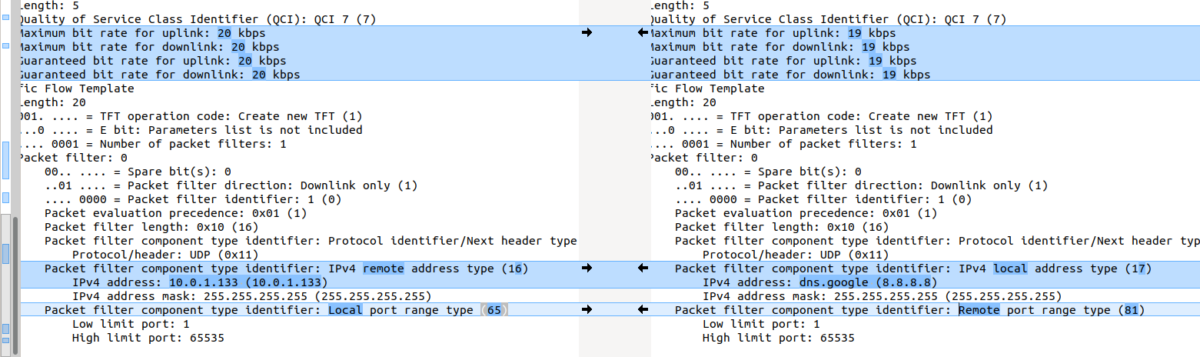

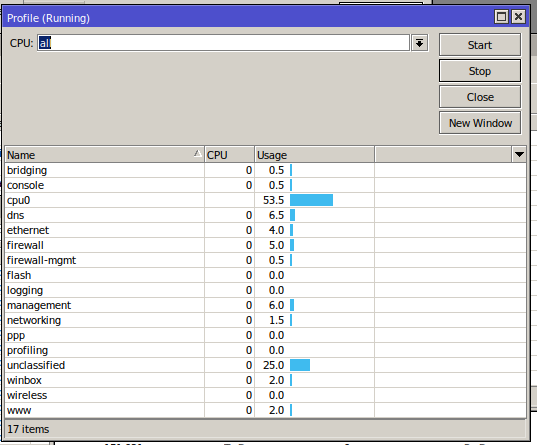

With TFTs and the N6 interfaces relying on the 5 value tuple with IPs/Ports/Protocol #s to make decisions, transporting Ethernet or Non-IP Data over 5G networks presents a problem.

But with fixed (aka Wireline) networks being able to leverage the 5G core (“Wireline Convergence”), we need a mechanism to handle Ethernet.

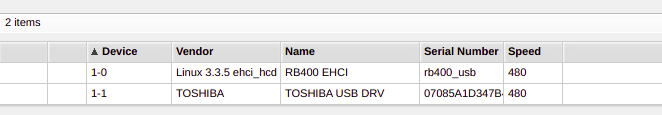

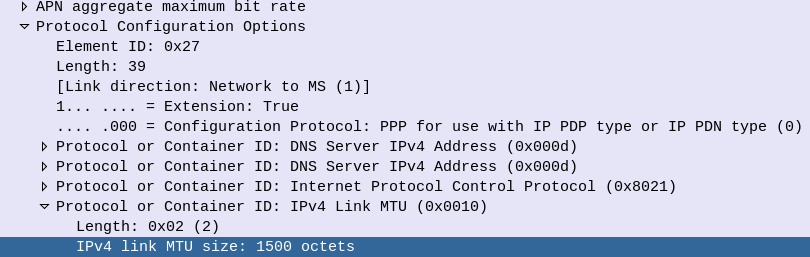

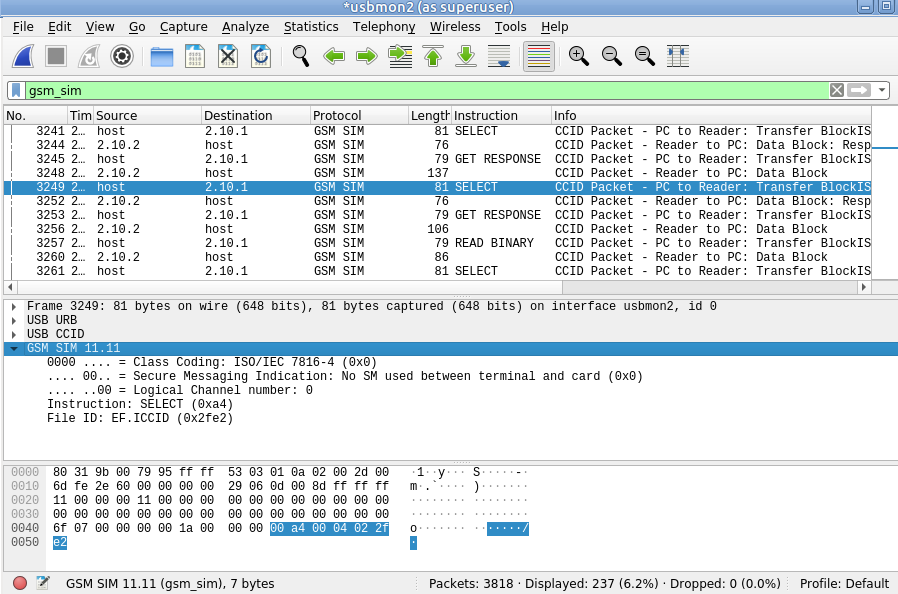

For starters in the PDU Session Establishment Request the UE indicates which PDN types, historically this was IPv4/6, but now if supported by the UE, Ethernet or Unstructured are available as PDU types.

We’ll focus on Ethernet as that’s the most defined so far,

Once an Ethernet PDU session has been setup, the N6 interface looks a bit different, for starters how does it know where, or how, to route unstructured traffic?

As far as 3GPP is concerned, that’s your problem:

Regardless of addressing scheme used from the UPF to the DN, the UPF shall be able to map the address used between the UPF and the DN to the PDU Session.

5.6.10.3 Support of Unstructured PDU Session type

In short, the UPF will need to be able to make the routing decisions to support this, and that’s up to the implementer of the UPF.

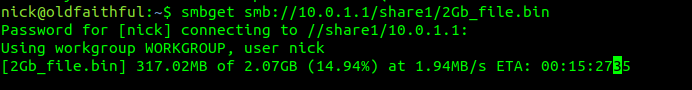

In the Ethernet scenario, the UPF would need to learn the MAC addresses behind the UE, handle ARP and use this to determine which traffic to send to which UE, encapsulate it into trusty old GTP, fill in the correct TEID and then send it to the gNodeB serving that user (if they are indeed on a RAN not a fixed network).

So where does this leave QoS? Without IPs to apply with TFTs and Packet Filter Sets to, how is this handled? In short, it’s not – Only the default QoS rule exist for a PDU Session of Type Unstructured. The QoS control for Unstructured PDUs is performed at the PDU Session level, meaning you can set the QFI when the PDU session is set up, but not based on traffic through that bearer.

Does this mean 5G RAN can transport Ethernet?

Well, it remains to be seen.

The specifications don’t cover if this is just for wireline scenarios or if it can be used on RAN.

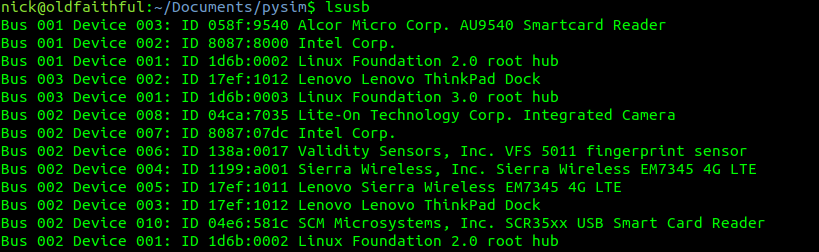

The 5G PDU Creation signaling has a field to indicate if the traffic is Ethernet, but to work over a RAN we would need UE support as well as support on the Core.

And for E-UTRAN?

For the foreseeable future we’re going to be relying on LTE/E-UTRAN as well as 5G. So if you’re mobile with a non-IP PDU, and you enter an area only served by LTE, what happens?

PDU Session types “Ethernet” and “Unstructured” are transferred to EPC as “non-IP” PDN type (when supported by UE and network).

5.17.2 Interworking with EPC

…

It is assumed that if a UE supports Ethernet PDU Session type and/or Unstructured PDU Session type in 5GS it will also support non-IP PDN type in EPS.

If you were not aware of support in the EPC for Non-IP PDNs, I don’t blame you – So far support the CIoT EPS optimizations were initially for Non-IP PDN type has been for NB-IoT to supporting Non-IP Data Delivery (NIDD) for lightweight LwM2M traffic.

So why is this? Well, it may have to do with WO 2017/032399 Al which is a patent held by Ericsson, regarding “COMMUNICATION OF NON-IP DATA OVER PACKET DATA NETWORKS” which may be restricting wide scale deployment of this,