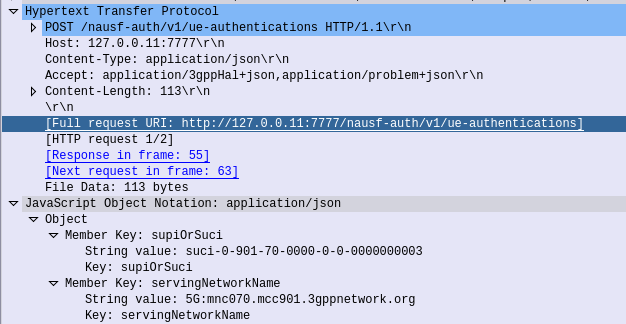

When a UE enters Idle mode, the network releases its radio resources and the UE drops into a low-power state.

When the UE wants to send data (Uplink) the UE just tells the network “hey I want to send something” and away it goes, nice and simple.

But when the network wants to send data to the UE (Downlink) then the UPF needs a method to tell the Control Plane (SGW-C or SMF) that there’s data waiting and to go and page the UE.

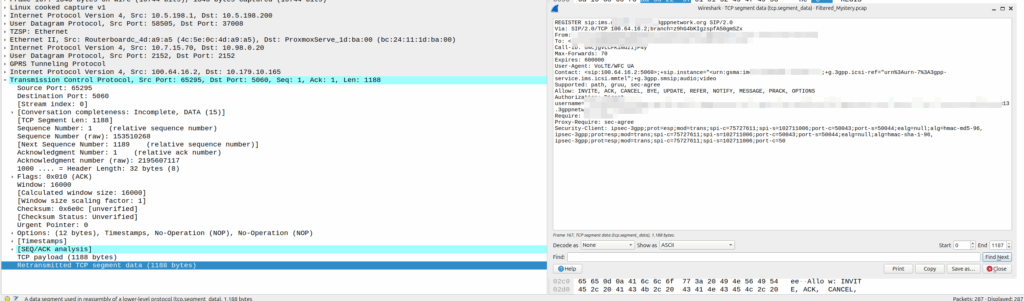

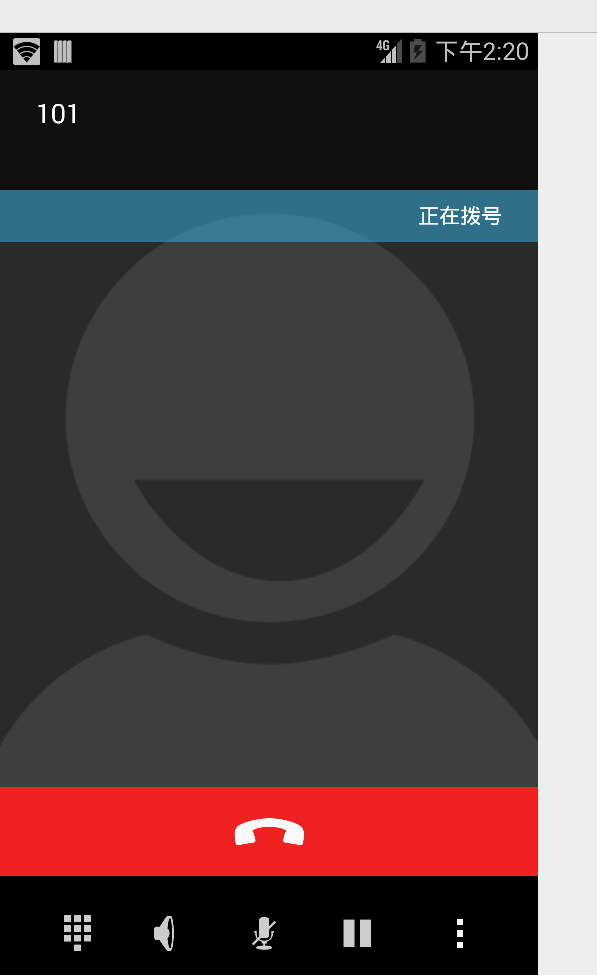

A prime example of this is when you’ve got a Mobile Terminated VoLTE call coming in, you need a way to tell the UE to wake up out of Idle mode because you’ve got something to send to it (a SIP INVITE).

But in order for this to work, we can’t just say “Hey I’ve got some packets for you” and let them get dropped, the UPF also needs to buffer (store temporarily) the downlink packets for the UE until the UE comes out of Idle mode, and then flush them out to deliver them to the UE.

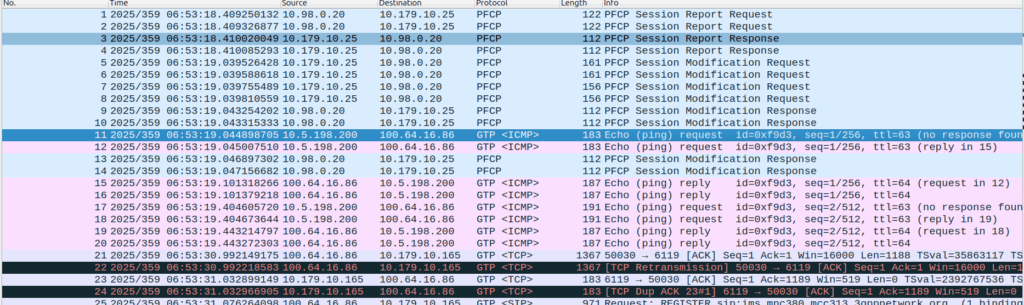

So let’s look at the flow.

Enabling Buffering (Idle Mode)

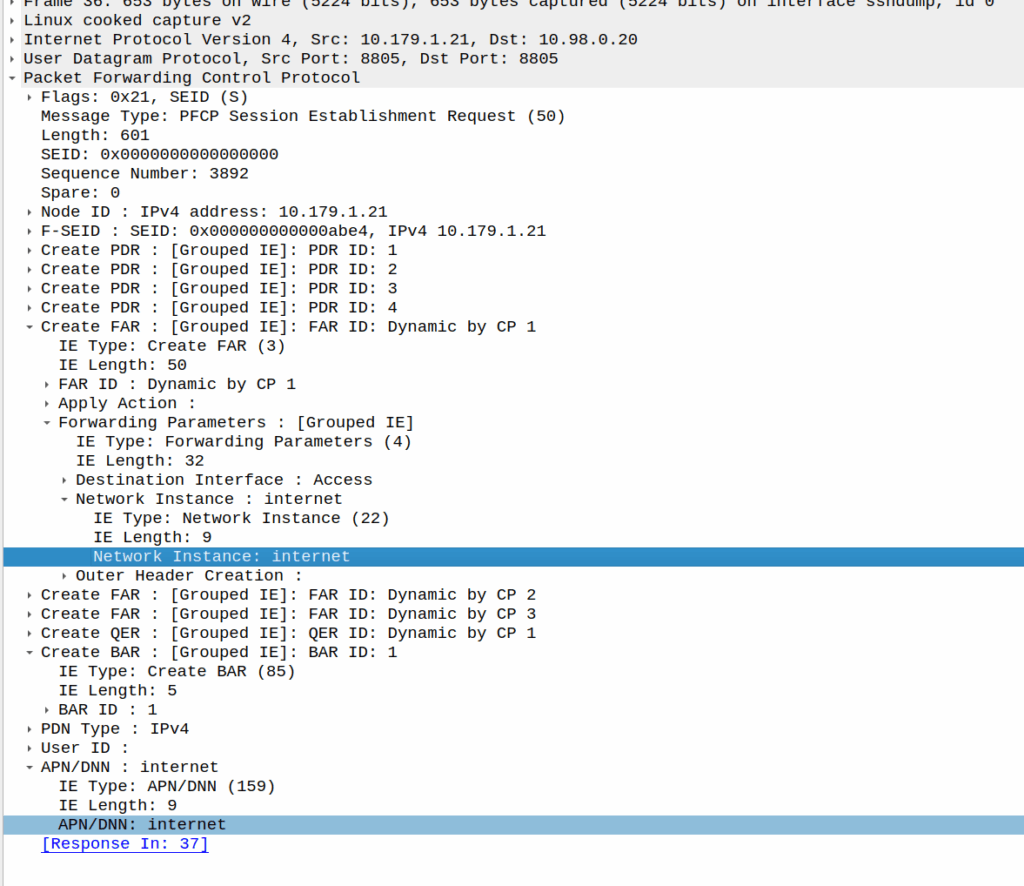

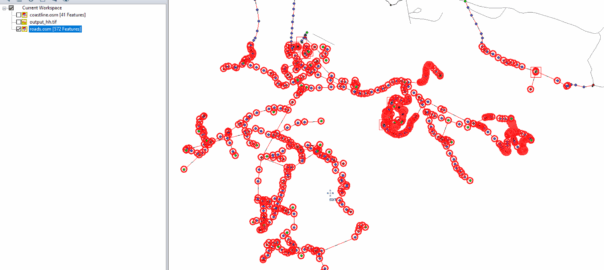

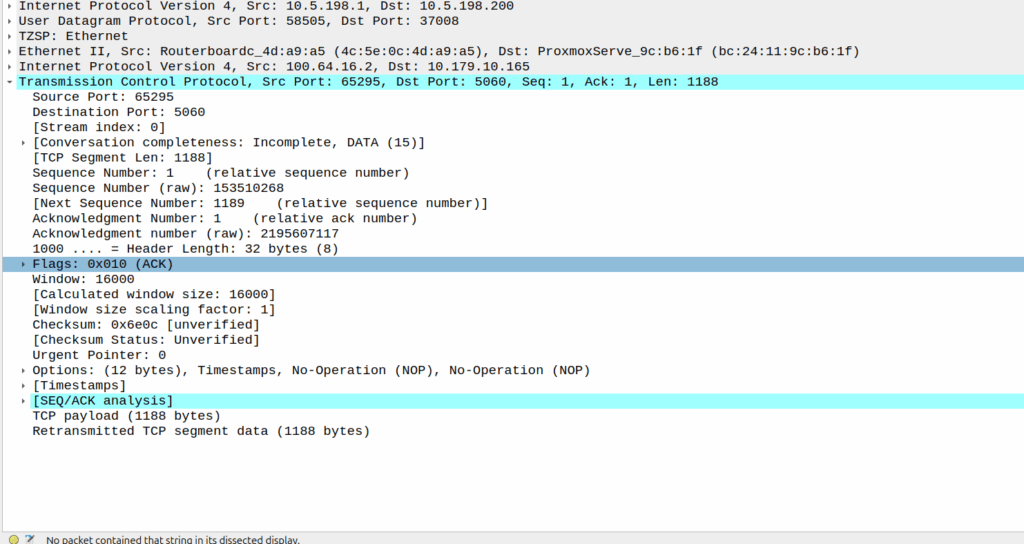

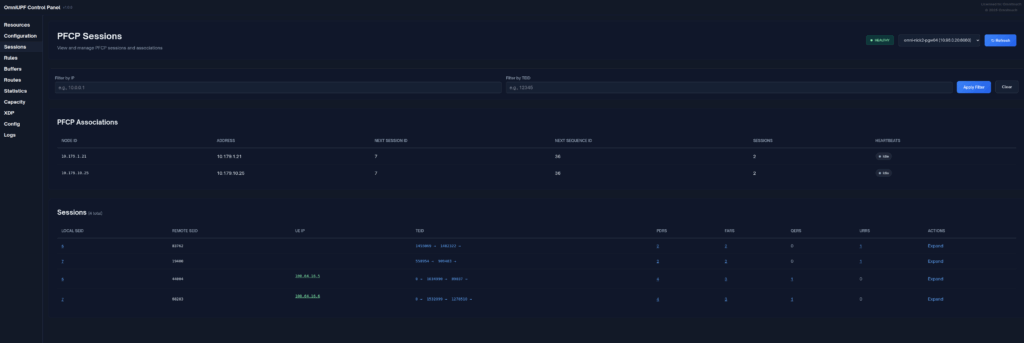

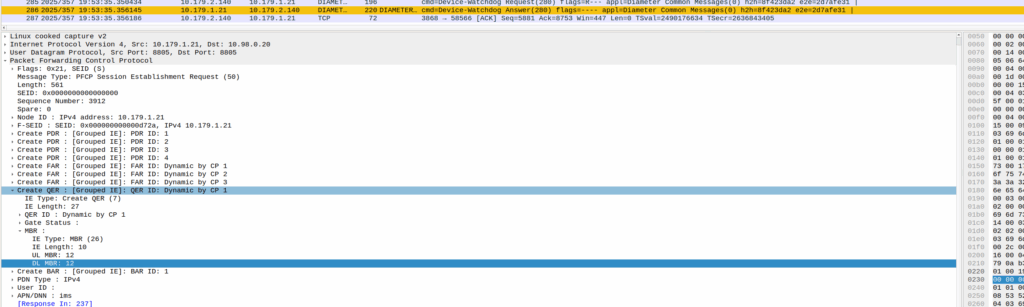

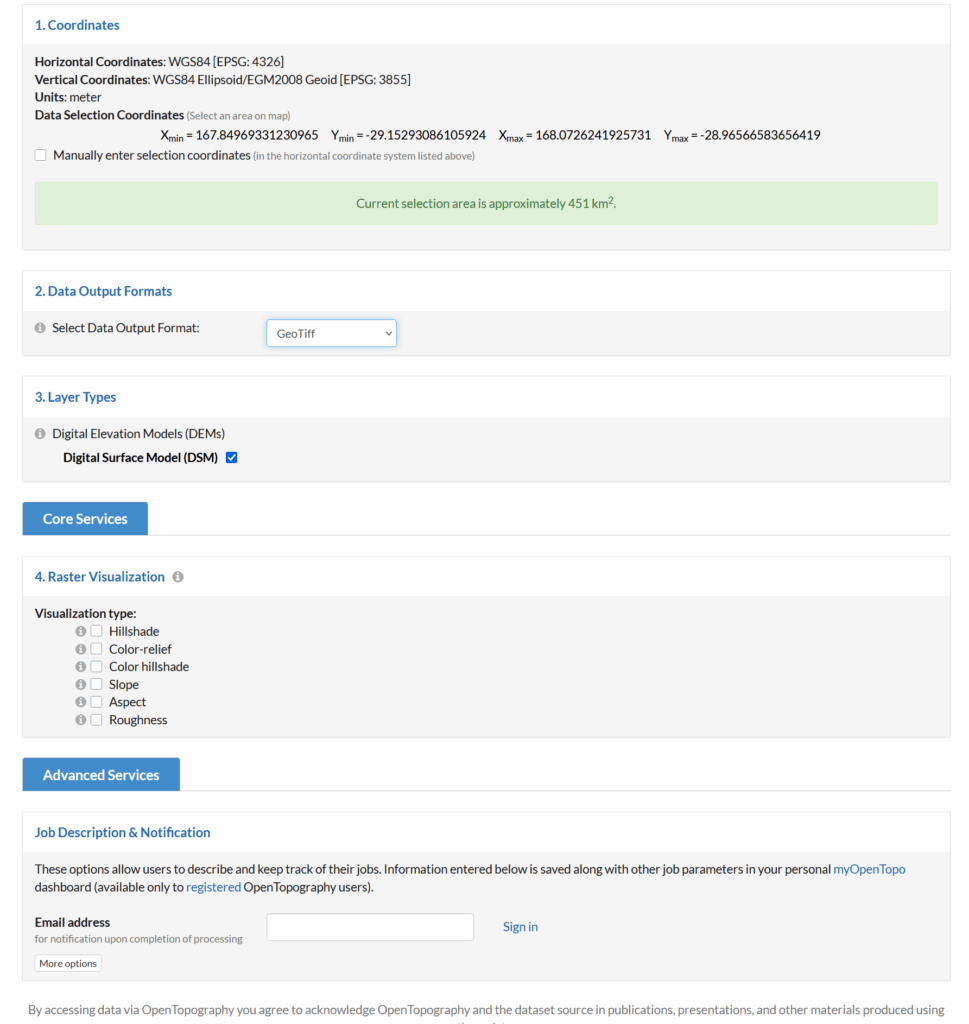

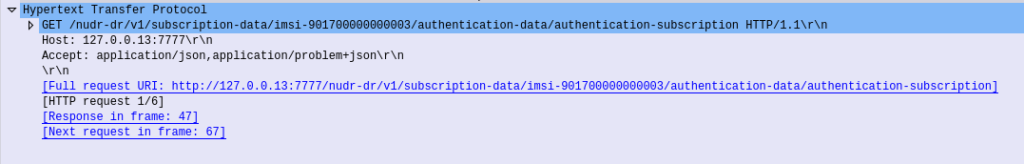

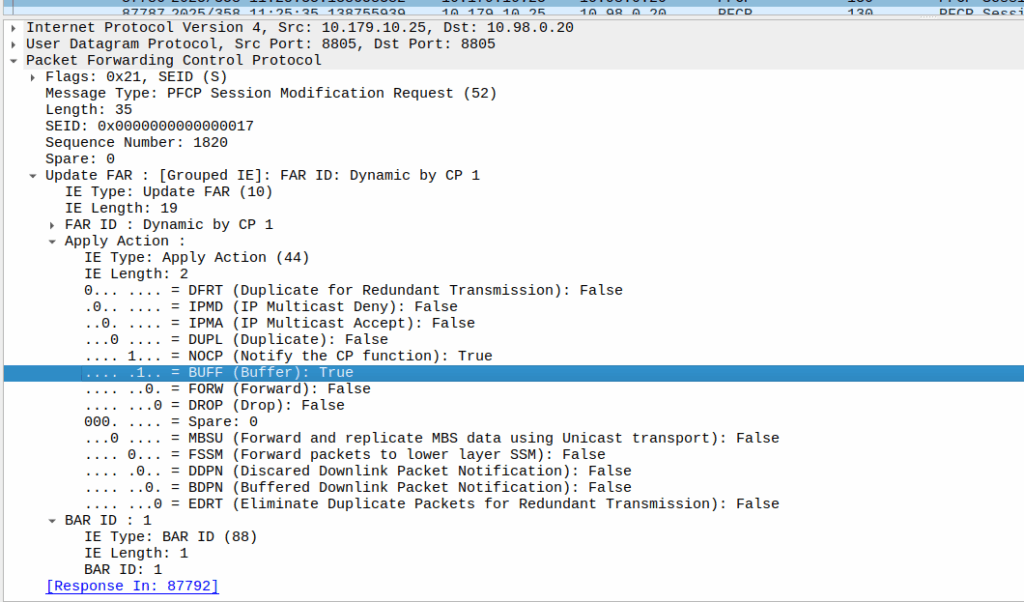

When the sub enters idle mode, the Control Plane (SGW-C for an EPC or SMF for a 5GC) sends a Session Modification Request with the BUFF (Buffer) and NOCP (Notify Control Plane) flags set, and FORW (Forward) turned off.

What this means is now for packets to that bearer, the UPF must:

- Not forward any traffic

- Buffer the traffic

- Notify the control plane when the first packet comes in that we buffer
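
As a rough illustration of those three rules, here is a minimal Python sketch of the Apply Action flags as a bitmask. The bit positions follow my reading of the Apply Action IE in 3GPP TS 29.244, so check them against the release you're working with; the `describe` helper and the variable names are made up for this example.

```python
from enum import IntFlag


class ApplyAction(IntFlag):
    """Flag bits of the PFCP Apply Action IE (assumed layout, see TS 29.244)."""
    DROP = 0x01  # discard the packet
    FORW = 0x02  # forward the packet
    BUFF = 0x04  # buffer the packet
    NOCP = 0x08  # notify the control plane of the first buffered packet
    DUPL = 0x10  # duplicate the packet


# What the control plane asks for when the UE goes idle: buffer + notify, no forward.
idle_action = ApplyAction.BUFF | ApplyAction.NOCP

# What it asks for once the UE is reachable again: forward only.
connected_action = ApplyAction.FORW


def describe(action: ApplyAction) -> dict:
    """Hypothetical helper: spell out what the UPF should do for a given flag set."""
    return {
        "forward": bool(action & ApplyAction.FORW),
        "buffer": bool(action & ApplyAction.BUFF),
        "notify_cp": bool(action & ApplyAction.NOCP),
    }


print(hex(idle_action), describe(idle_action))
```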

Then the UPF just sits and waits for any incoming packets.

The Notify

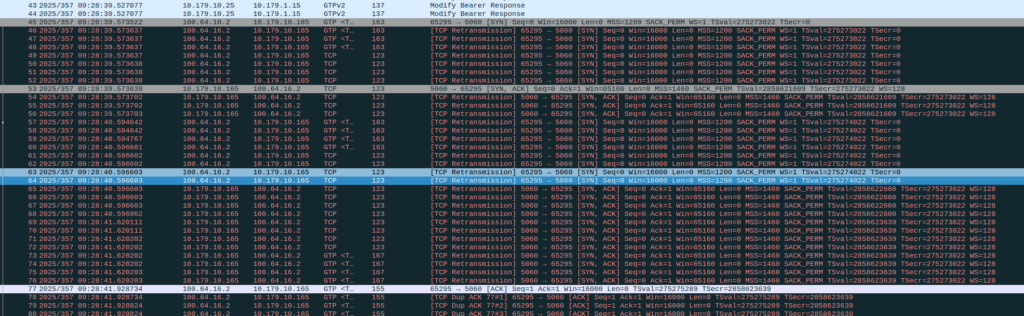

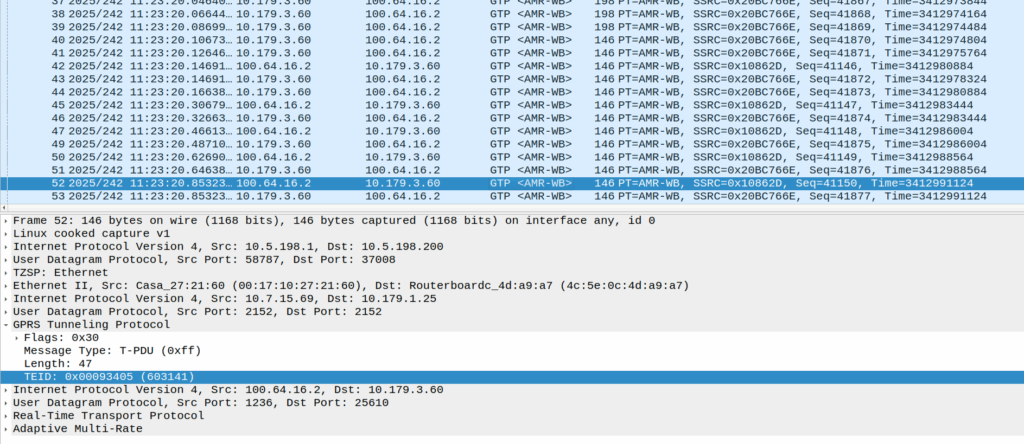

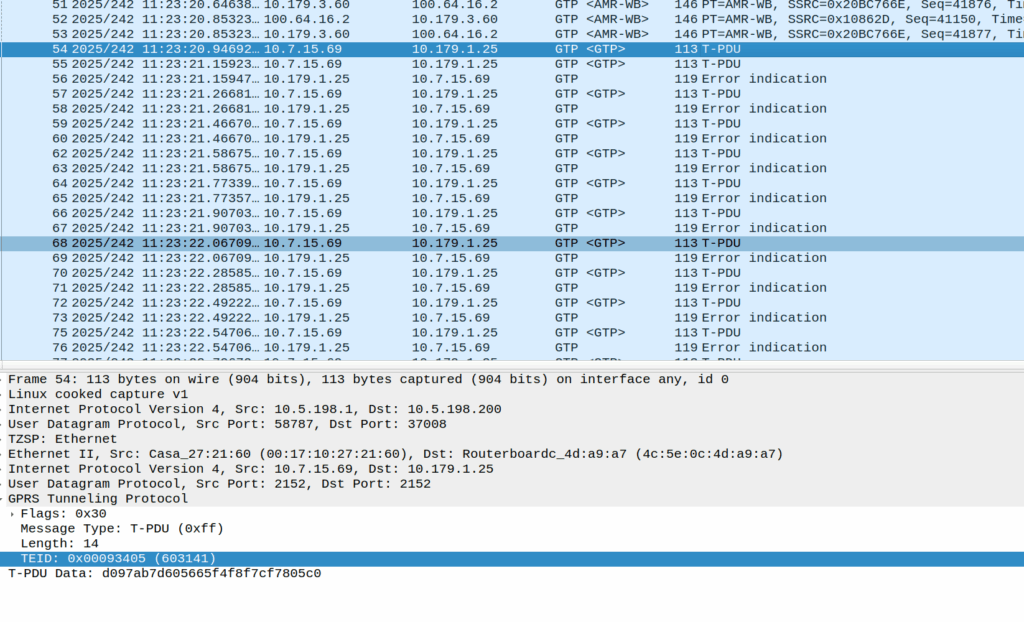

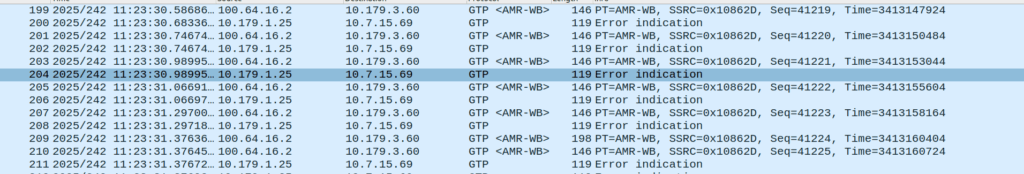

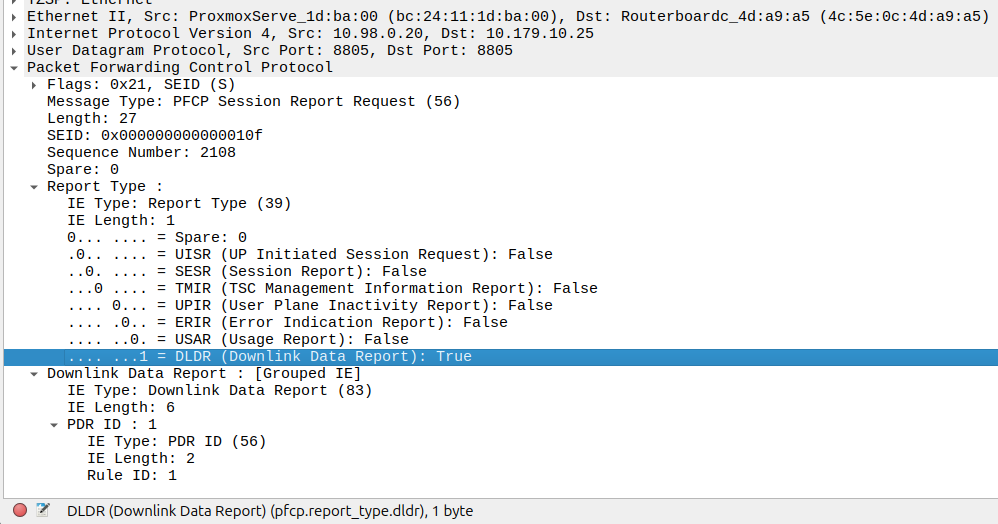

When the UPF gets an incoming packet that it’s supposed to buffer and notify, well, it does just that.

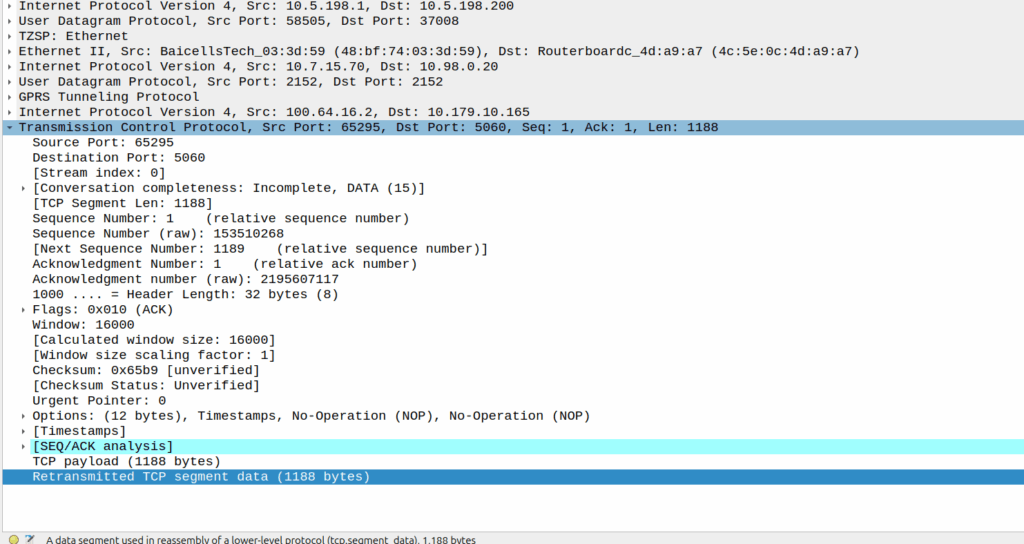

The packets are copied into a buffer, in sequence, and for the first packet, the UPF must send a notification to the Control Plane.
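
The buffer-and-notify-once behaviour can be sketched roughly like this. It's a toy model, not how any particular UPF does it: the `IdleBuffer` class, the `notify_control_plane` callback and the `max_packets` limit (with drop-when-full as the overflow policy) are all assumptions for the sake of the example.

```python
from collections import deque


class IdleBuffer:
    """Toy per-bearer downlink buffer: keeps packets in order, notifies once."""

    def __init__(self, notify_control_plane, max_packets=1000):
        self._queue = deque()
        self._notify = notify_control_plane  # hypothetical callback to the CP
        self._max = max_packets              # assumed buffer limit
        self._notified = False

    def on_downlink_packet(self, packet: bytes) -> None:
        if len(self._queue) >= self._max:
            return  # buffer full: drop the packet (assumed policy)
        self._queue.append(packet)  # keep arrival order
        if not self._notified:
            # Only the first buffered packet triggers a notification.
            self._notified = True
            self._notify()

    def pending(self) -> list:
        """Return the buffered packets in arrival order."""
        return list(self._queue)
```

Feeding three packets into an `IdleBuffer` calls the callback once, and `pending()` returns the three packets in the order they arrived.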

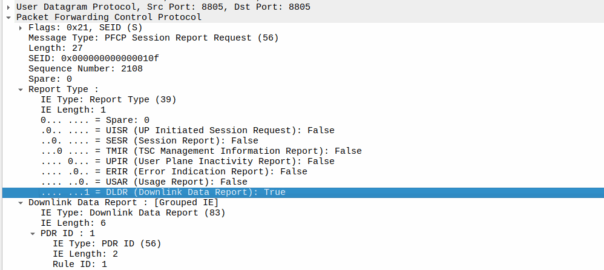

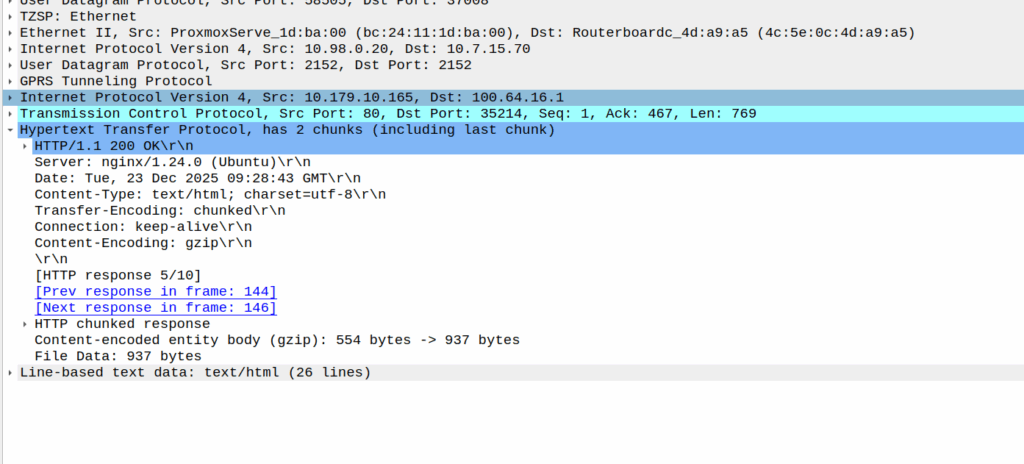

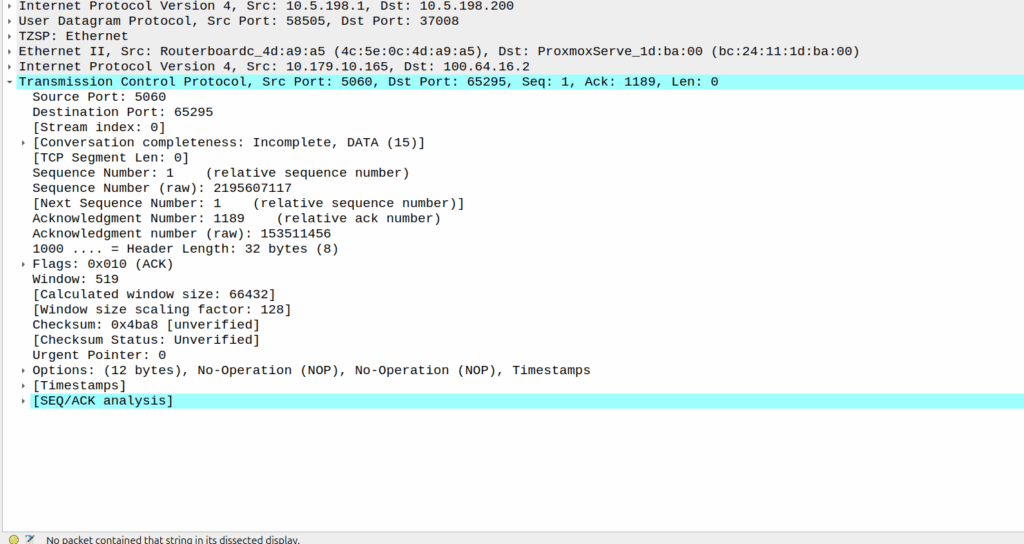

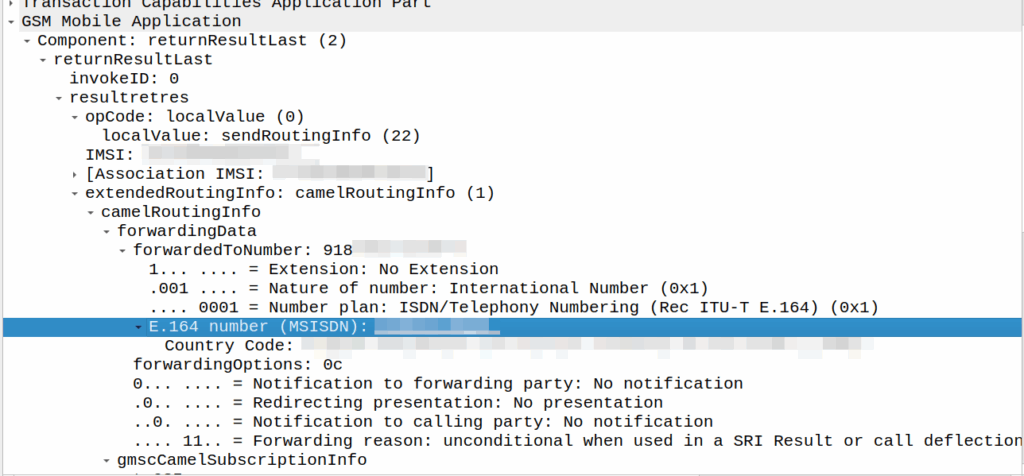

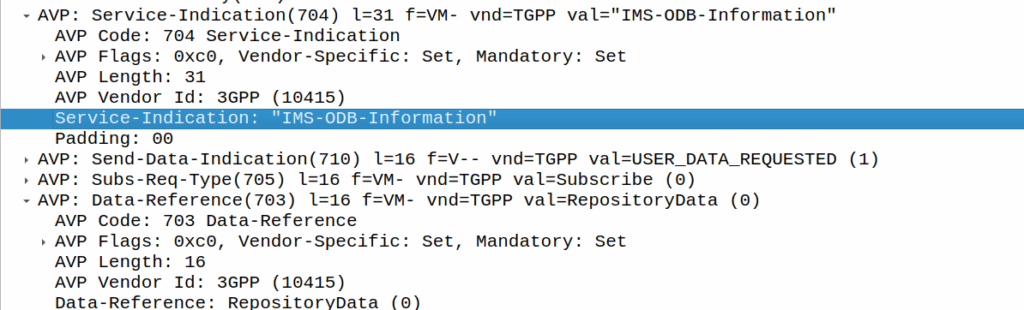

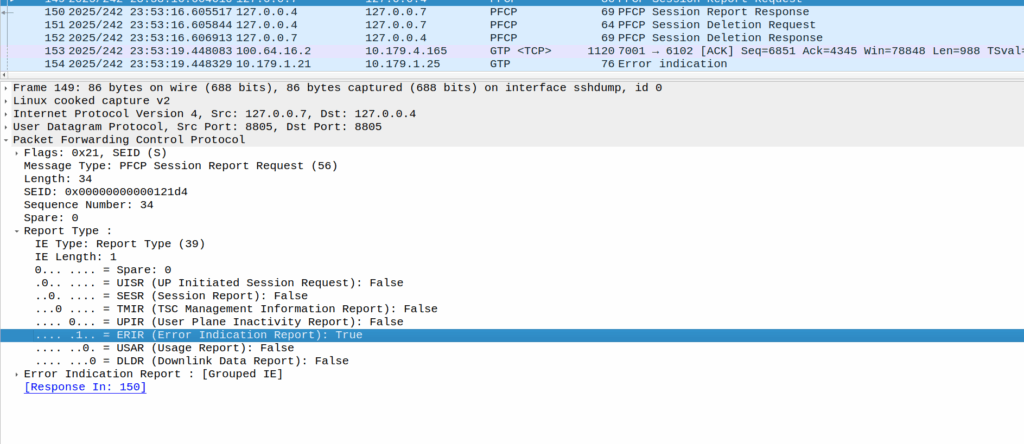

That looks like this: it’s just a Session Report Request with the Downlink Data Report flag set.
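
To make the contents of that message a bit more concrete, here is a simplified sketch of it as a plain Python dict rather than real wire encoding. The message type numbers and the Report Type bit positions are from my reading of 3GPP TS 29.244 and should be verified against the spec; the `build_downlink_data_report` function and the dict layout are invented for illustration.

```python
from enum import IntFlag

# PFCP message types (assumed values, see TS 29.244)
PFCP_SESSION_REPORT_REQUEST = 56
PFCP_SESSION_REPORT_RESPONSE = 57


class ReportType(IntFlag):
    """Flag bits of the PFCP Report Type IE (assumed layout)."""
    DLDR = 0x01  # Downlink Data Report
    USAR = 0x02  # Usage Report
    ERIR = 0x04  # Error Indication Report
    UPIR = 0x08  # User Plane Inactivity Report


def build_downlink_data_report(seid: int, pdr_id: int) -> dict:
    """Hypothetical helper: the fields a UPF would put in the notification."""
    return {
        "message_type": PFCP_SESSION_REPORT_REQUEST,
        "seid": seid,                               # session the report is for
        "report_type": ReportType.DLDR,             # "I have downlink data waiting"
        "downlink_data_report": {"pdr_id": pdr_id}, # which PDR matched the packet
    }


request = build_downlink_data_report(seid=0x1234, pdr_id=2)
print(request)
```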

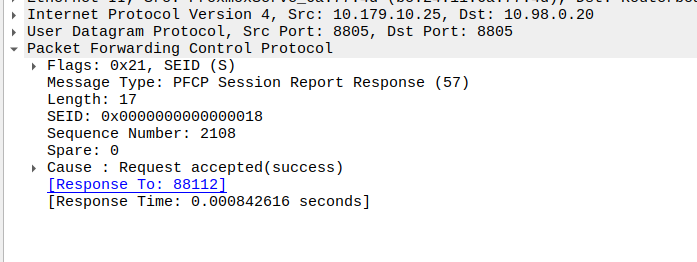

Now the SMF/SGW-C sends back a Session Report Response and starts the process of paging the UE.

At the same time the UPF keeps buffering – its work is not done.

Flushing and Forwarding

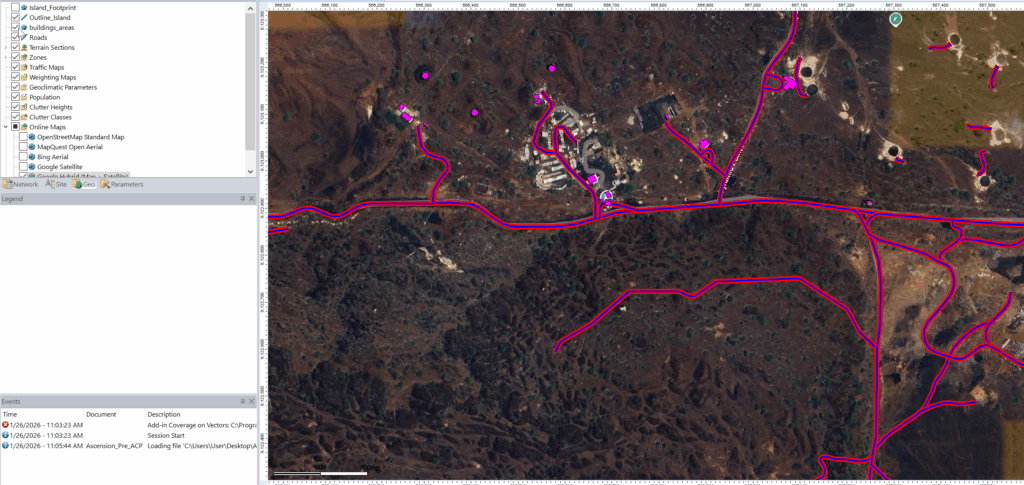

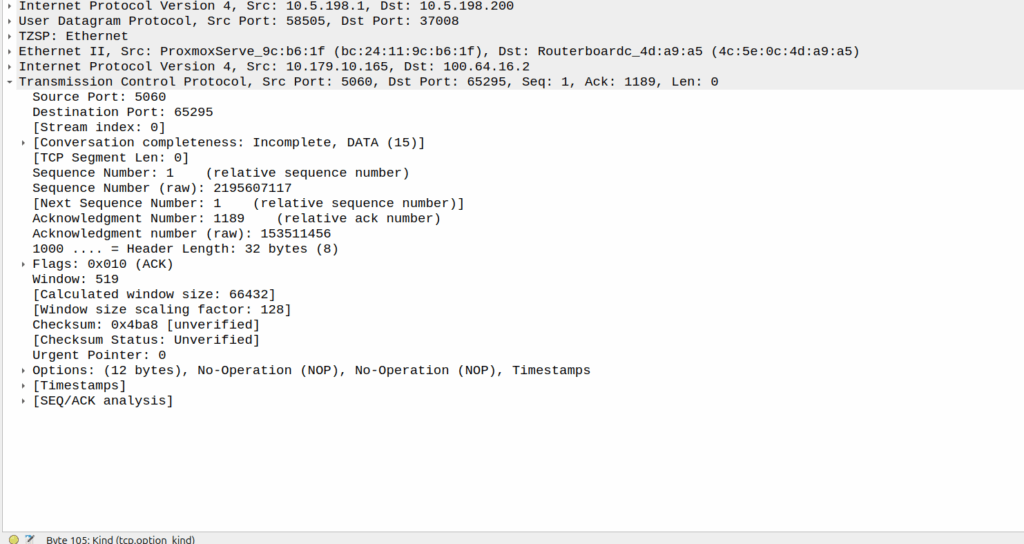

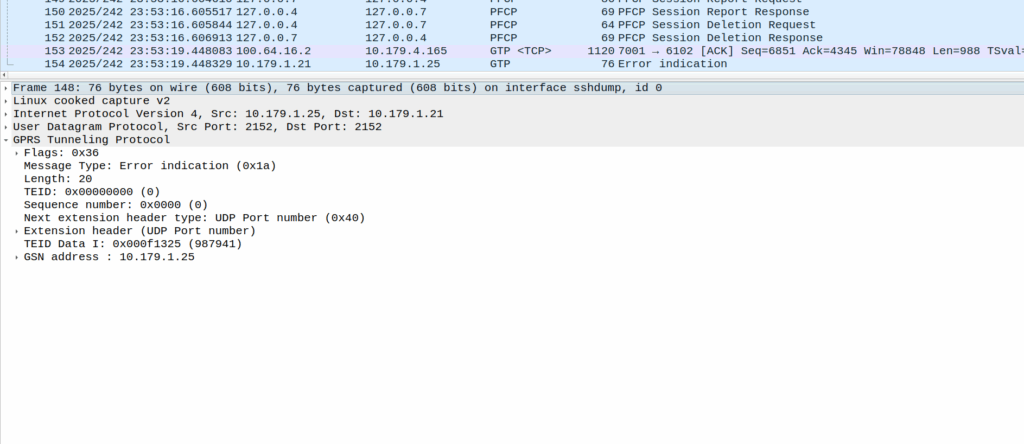

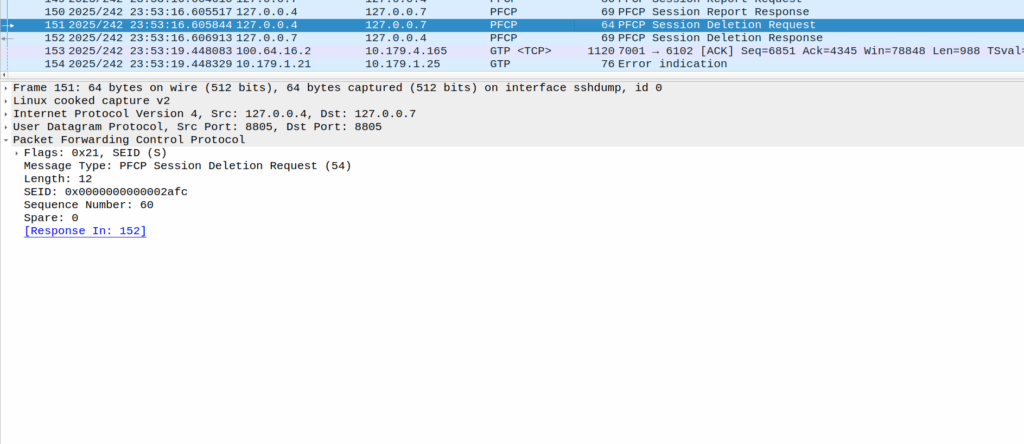

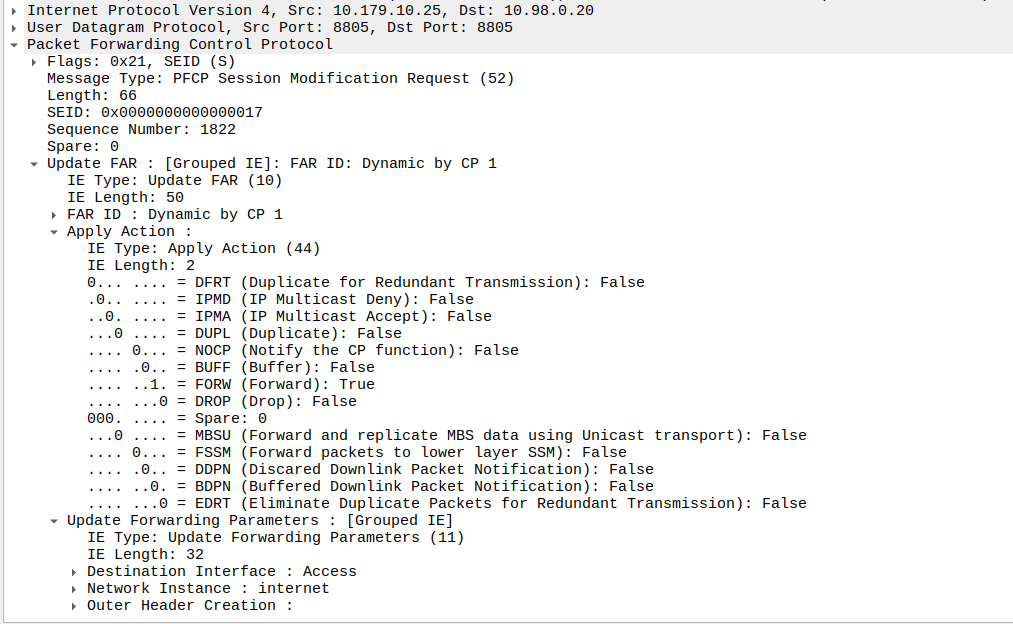

Once the UE has become reachable, the Control Plane needs to modify the bearer to turn forwarding back on. It does this through another Session Modification Request, which is the inverse of the one it sent to turn on buffering: we’re turning off buffering and notifications, and turning on forwarding.

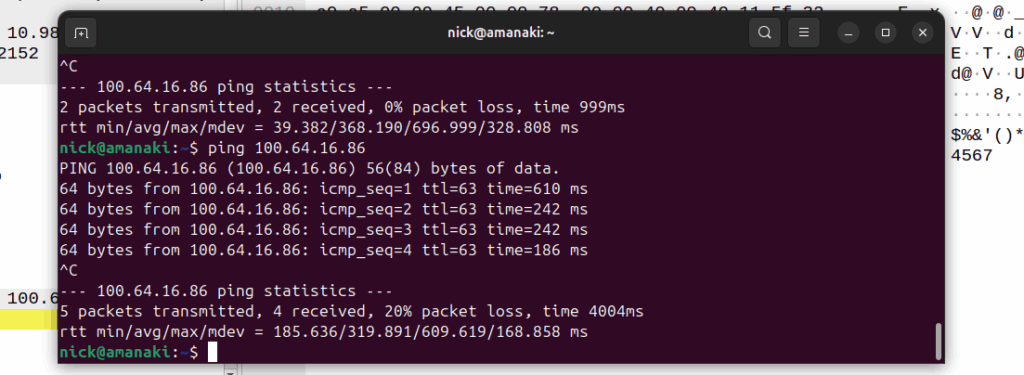

Now the UPF flushes its buffer – it’ll send all the packets that were queued up out over the wire towards the gNB / eNodeB, so the SIP INVITE for the MT call or whatever will make it through.
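
The flush itself is conceptually just draining a queue in arrival order once forwarding is switched back on. A minimal sketch, assuming the buffer is a `deque` and that `send` is whatever hands a packet to the tunnel towards the gNB / eNodeB (both names are placeholders, as is `apply_far_update`):

```python
from collections import deque


def apply_far_update(buffered: deque, forward_enabled: bool, send) -> int:
    """Drain the buffer in arrival order if forwarding is on; return packets sent."""
    if not forward_enabled:
        return 0  # still buffering, nothing leaves the UPF
    sent = 0
    while buffered:
        send(buffered.popleft())  # oldest packet goes out first
        sent += 1
    return sent
```

Calling it with `forward_enabled=False` leaves the queue untouched; calling it with `True` empties the queue and returns how many packets went out.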

One thing to note is that the packets that get buffered are going to take some time to get delivered, as the notify / page UE / reconnect UE / Session Modification Request (to enable forwarding again) steps all need to happen before the buffers are flushed and delivered.

And that’s pretty much it, the UPF has now flushed its buffers and moves back to forwarding actions.