I’ve been working for some time on open source mobile network cores, and one feature that has been a real struggle for a lot of people (Myself included) is getting VoLTE / IMS working.

Here’s some of the issues I’ve faced, and the lessons I learned along the way,

Sadly on most UEs / handsets, there’s no “Make VoLTE work now” switch, you’ve got a satisfy a bunch of dependencies in the OS before the baseband will start sending SIP anywhere.

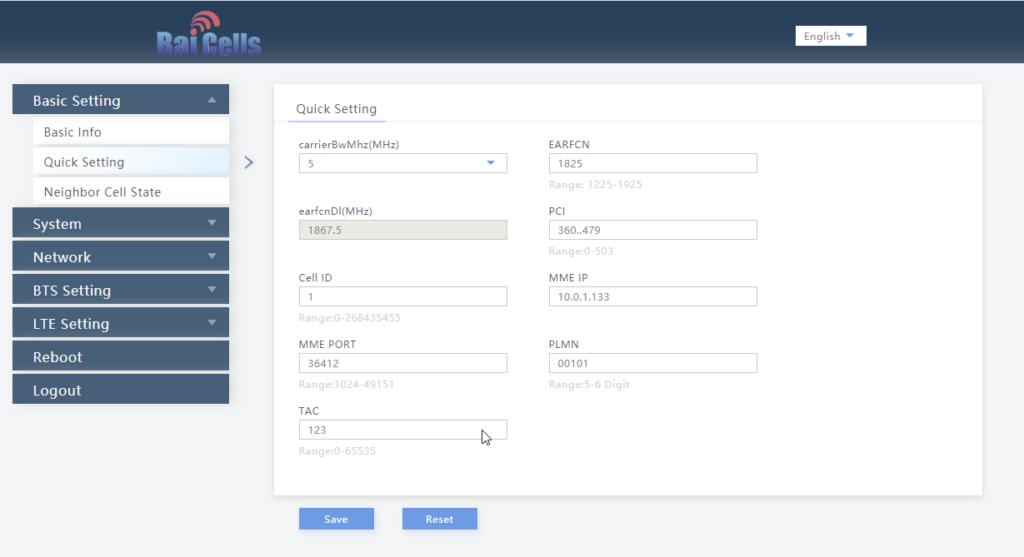

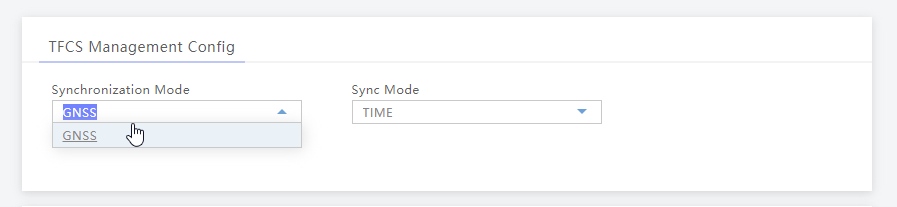

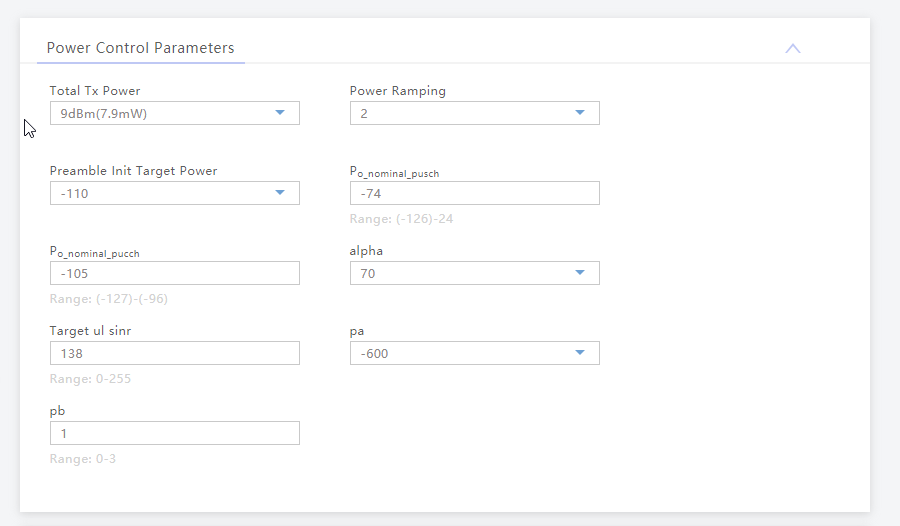

Get the right Hardware

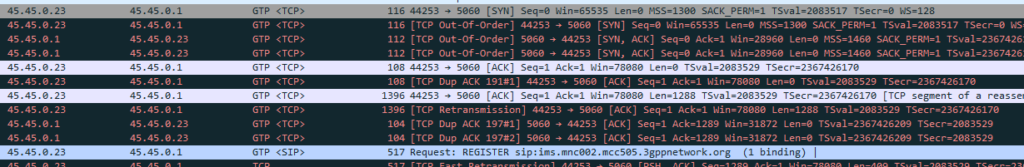

Your eNB must support additional bearers (dedicated bearers I’ve managed to get away without in my testing) so the device can setup an APN for the IMS traffic.

Sadly at the moment this rules our Software Defined eNodeBs, like srsENB.

In the end I opted for a commercial eNB which has support for dedicated bearers.

ISIM – When you thought you understood USIMs – Guess again

According to the 3GPP IMS docs, an ISIM (IMS SIM) is not a requirement for IMS to work.

However in my testing I found Android didn’t have the option to enable VoLTE unless an ISIM was present the first time.

In a weird quirk I found once I’d inserted an ISIM and connected to the VoLTE network, I could put a USIM in the UE and also connect to the VoLTE network.

Obviously the parameters you can set on the USIM, such as Domain, IMPU, IMPI & AD, are kind of “guessed” but the AKAv1-MD5 algorithm does run.

Getting the APN Config Right

There’s a lot of things you’ll need to have correct on your UE before it’ll even start to think about sending SIP messaging.

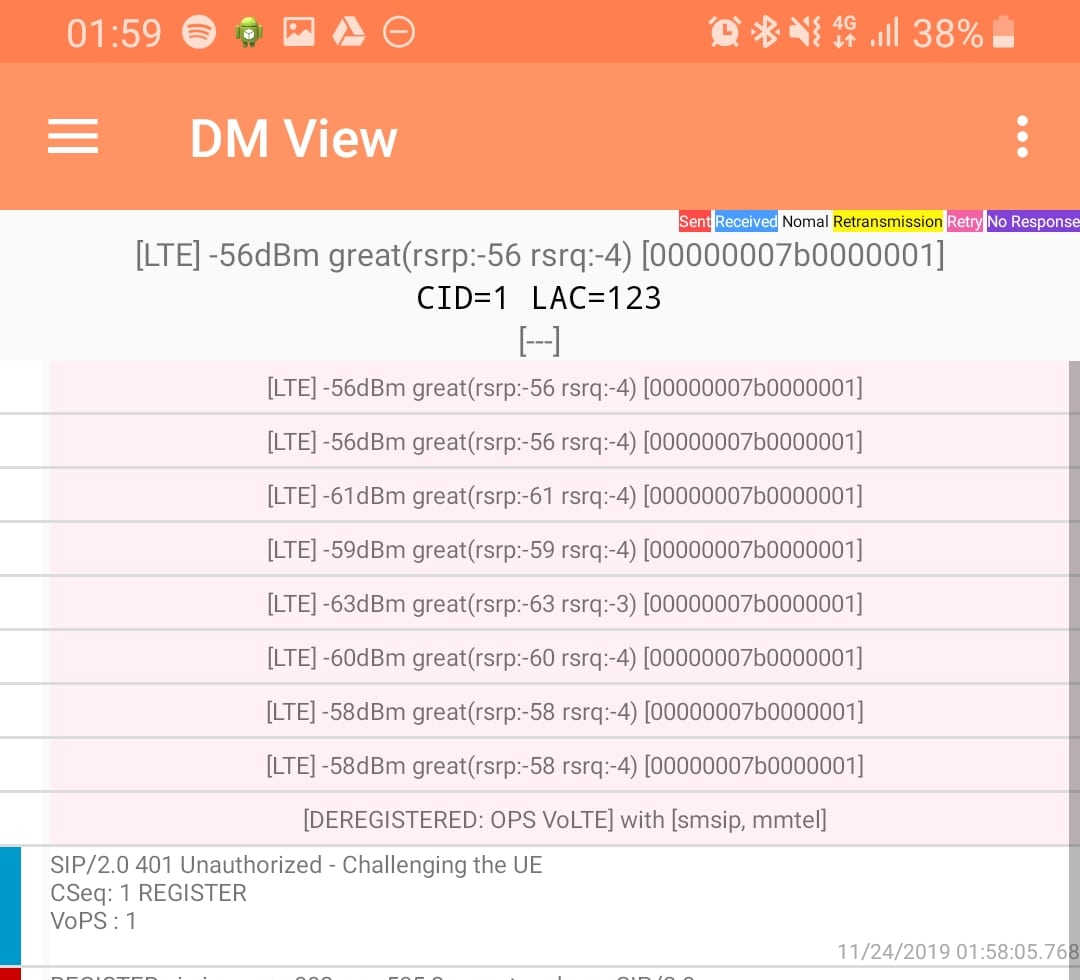

I was using commercial UE (Samsung handsets) without engineering firmware so I had very limited info on what’s going on “under the hood”. There’s no “Make VoLTE do” tickbox, there’s VoLTE enable, but that won’t do anything by default.

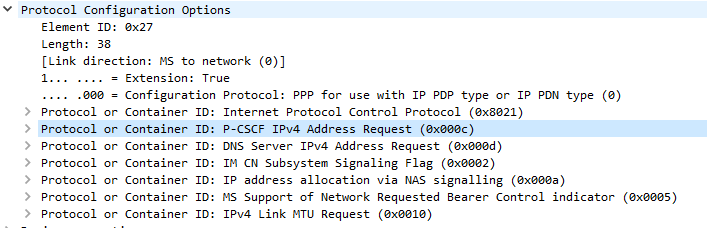

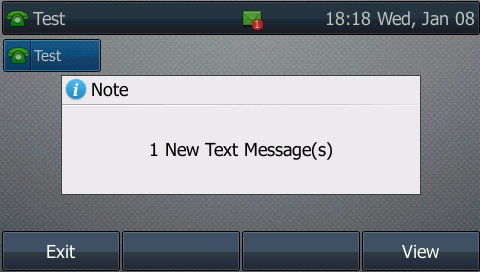

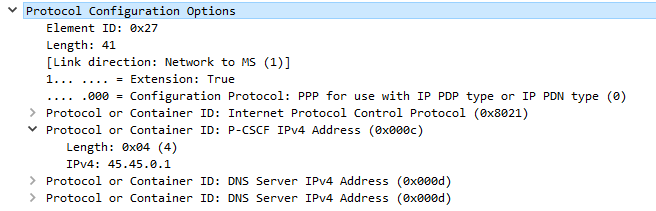

In the end I found adding a new APN called ims with type ims and enabling VoLTE in the settings finally saw the UE setup an IMS dedicated bearer, and request the P-CSCF address in the Protocol Configuration Options.

Get the P-GW your P-CSCF Address

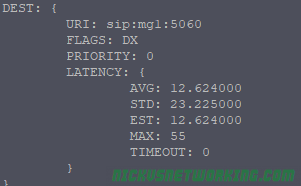

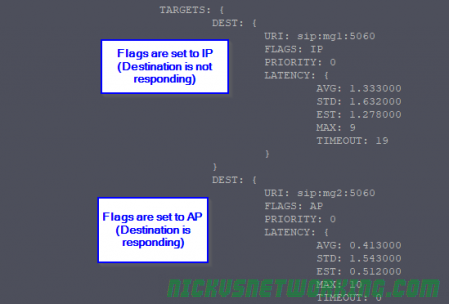

If your P-GW doesn’t know the IP of your P-CSCF, it’s not going to be able to respond to it in the Protocol Configuration Options (PCO) request sent by the UE with that nice new bearer for IMS we just setup.

There’s no way around Mutual Authentication

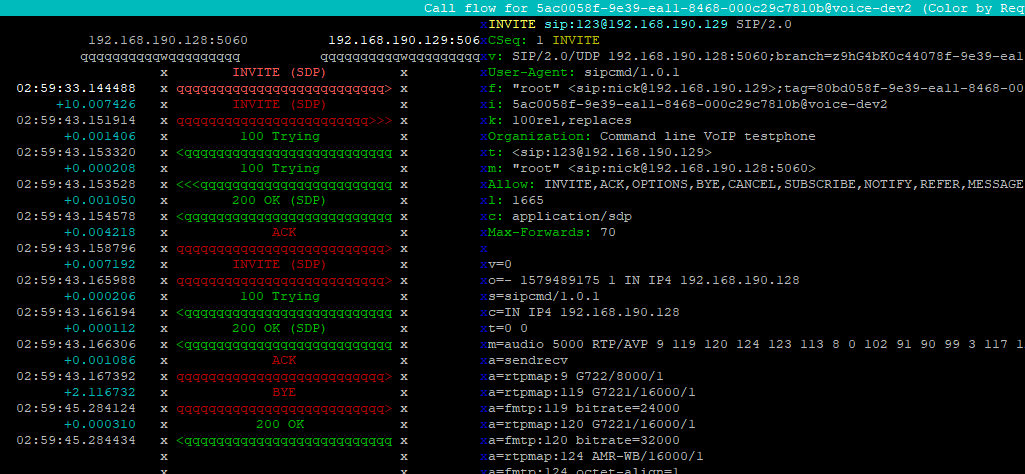

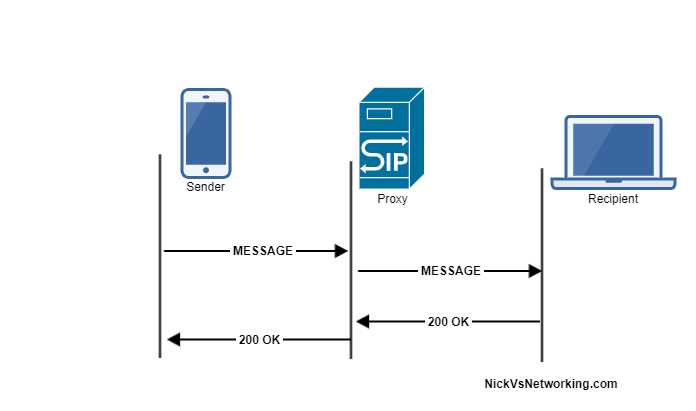

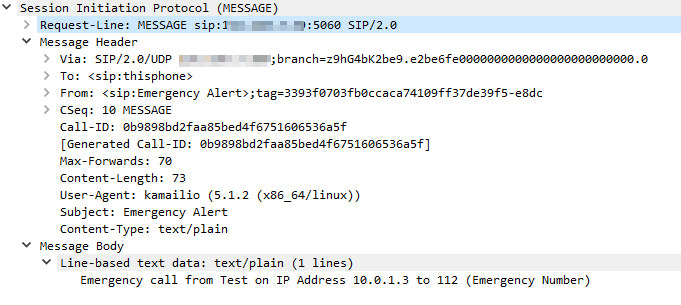

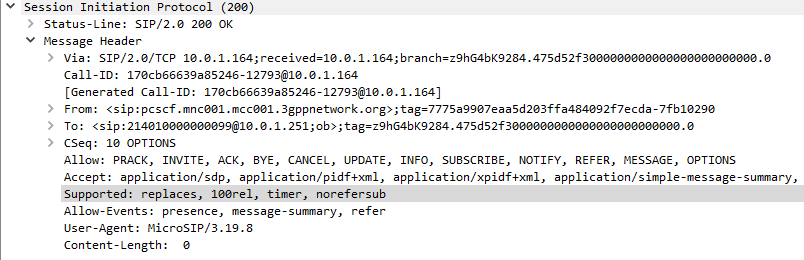

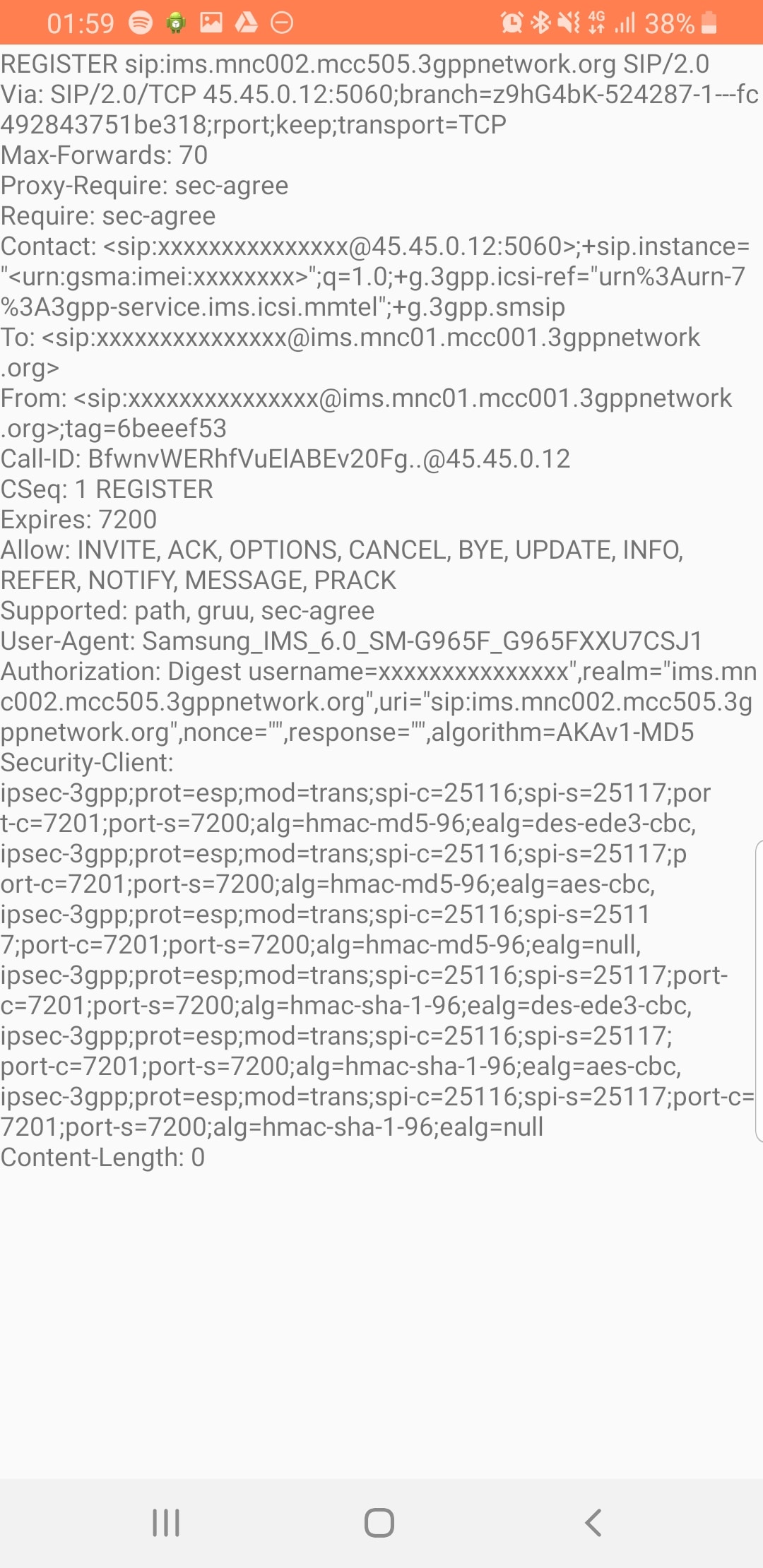

Coming from a voice background, and pretty much having RFC 3261 tattooed on my brain, when I finally got the SIP REGISTER request sent to the Proxy CSCF I knocked something up in Kamailio to send back a 200 OK, thinking that’d be the end of it.

For any other SIP endpoint this would have been fine, but IMS Clients, nope.

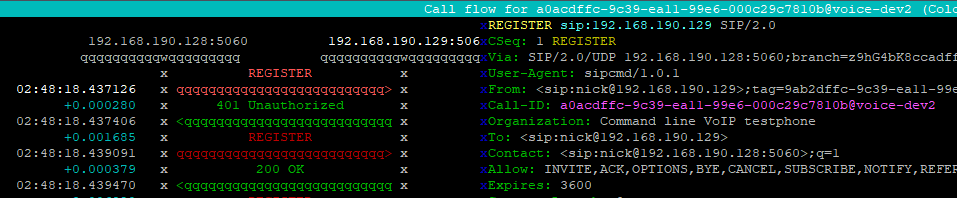

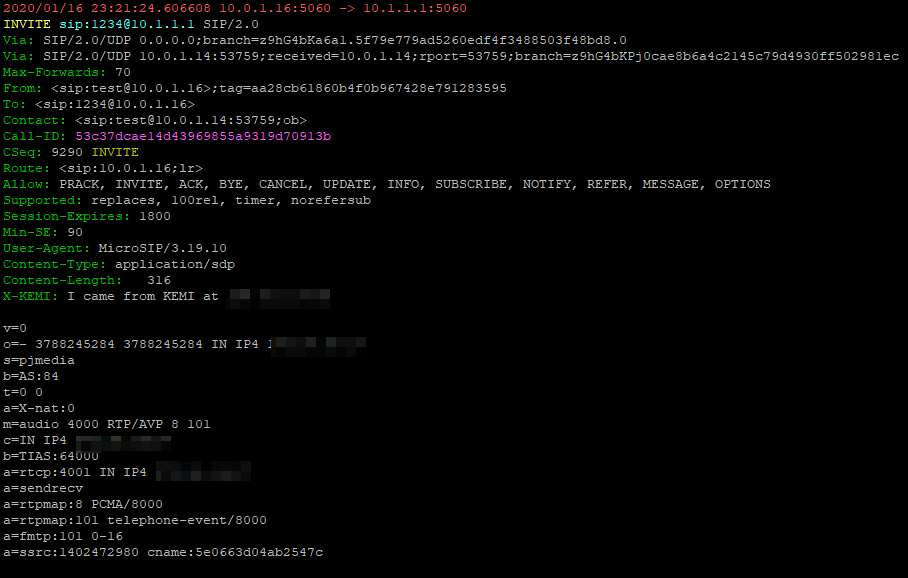

Reading the specs drove home the same lesson anyone attempting to setup their own LTE network quickly learns – Mutual authentication means both the network and the UE need to verify each other, while I (as the network) can say the UE is OK, the UE needs to check I’m on the level.

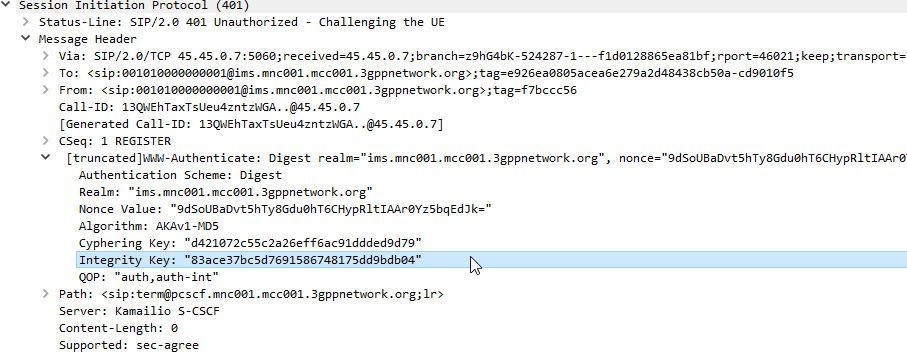

In the end I added Multimedia Authentication support to PyHSS, and responded with a Crypto challenge using the AKAv1-MD5 auth,

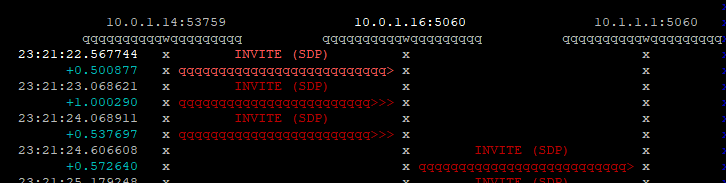

I saw my 401 response go back to the UE and then no response. Nada.

This led to my next lesson…

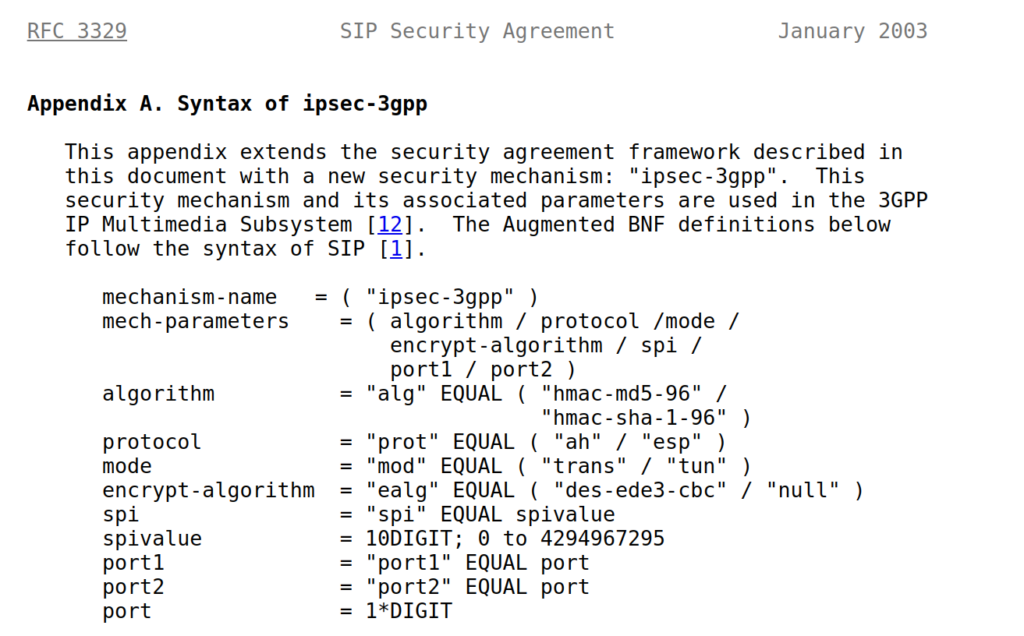

There’s no way around IPsec

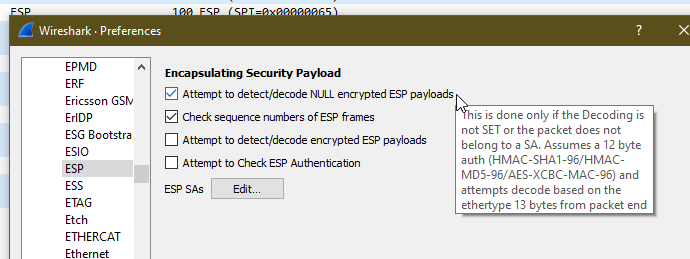

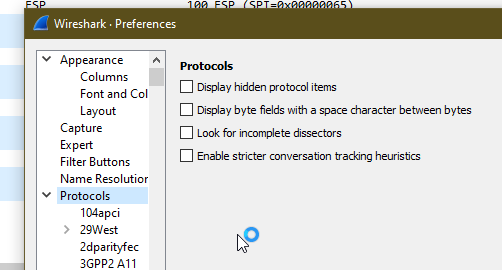

According to the 3GPP docs, support for IPsec is optional, but I found this not to be the case on the handsets I’ve tested.

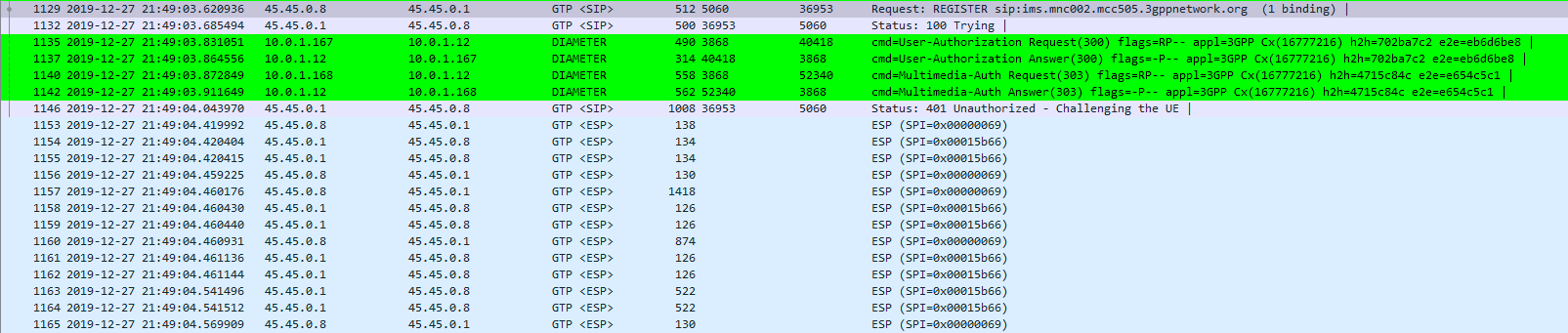

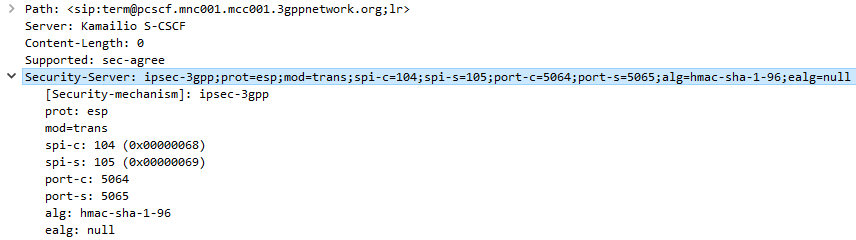

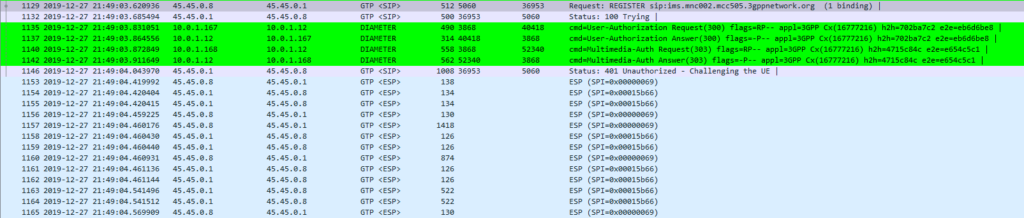

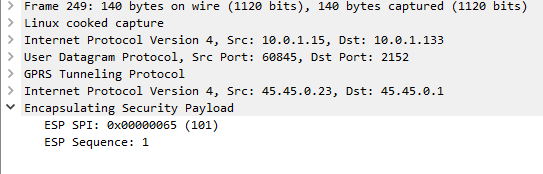

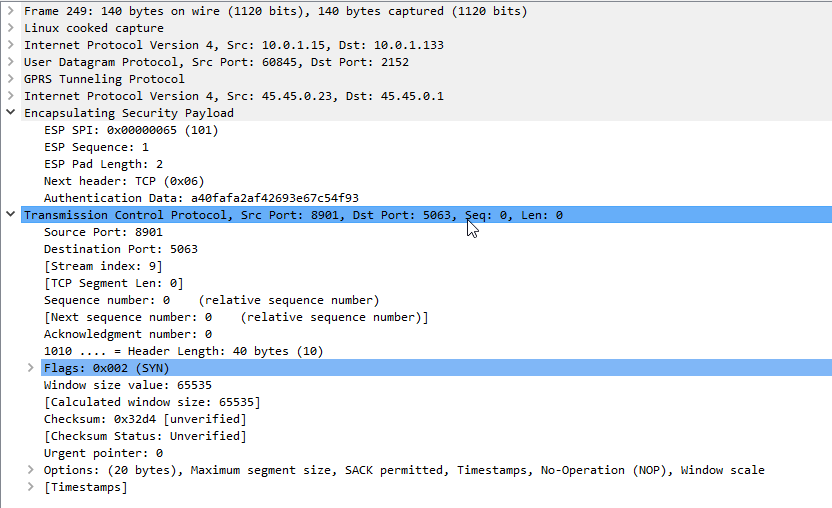

After sending back my 401 response the UE looks for the IPsec info in the 401 response, then tries to setup an IPsec SA and sends ESP packets back to the P-CSCF address.

Even with my valid AKAv1-MD5 auth, I found my UE wasn’t responding until I added IPsec support on the P-CSCF, hence why I couldn’t see the second REGISTER with the Authentication Info.

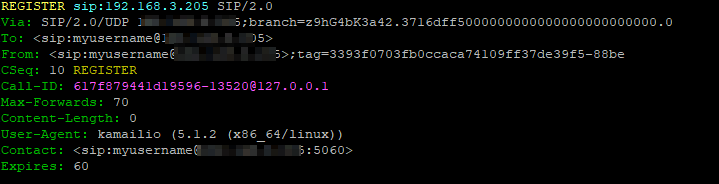

After setting up IPsec support, I finally saw the UE’s REGISTER with the AKAv1-MD5 authentication, and was able to send a 200 OK.

Get Good at Mind Reading (Or an Engineering Firmware)

To learn all these lessons took a long time,

One thing I worked out a bit late but would have been invaluable was cracking into the Engineering Debug options on the UEs I was testing with.

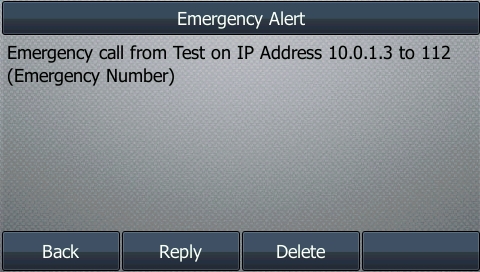

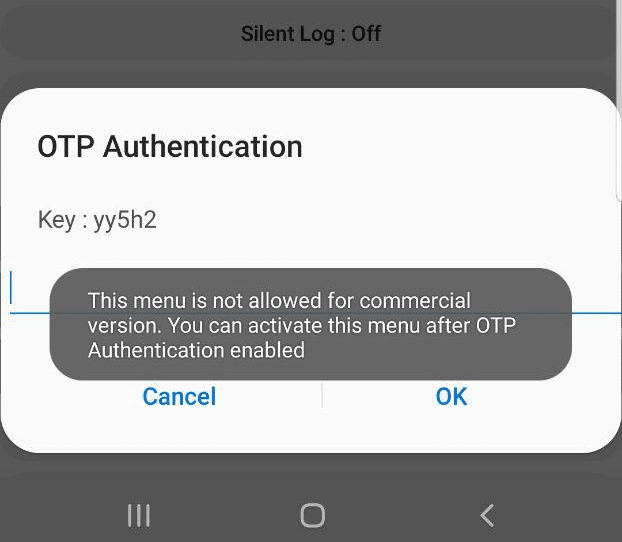

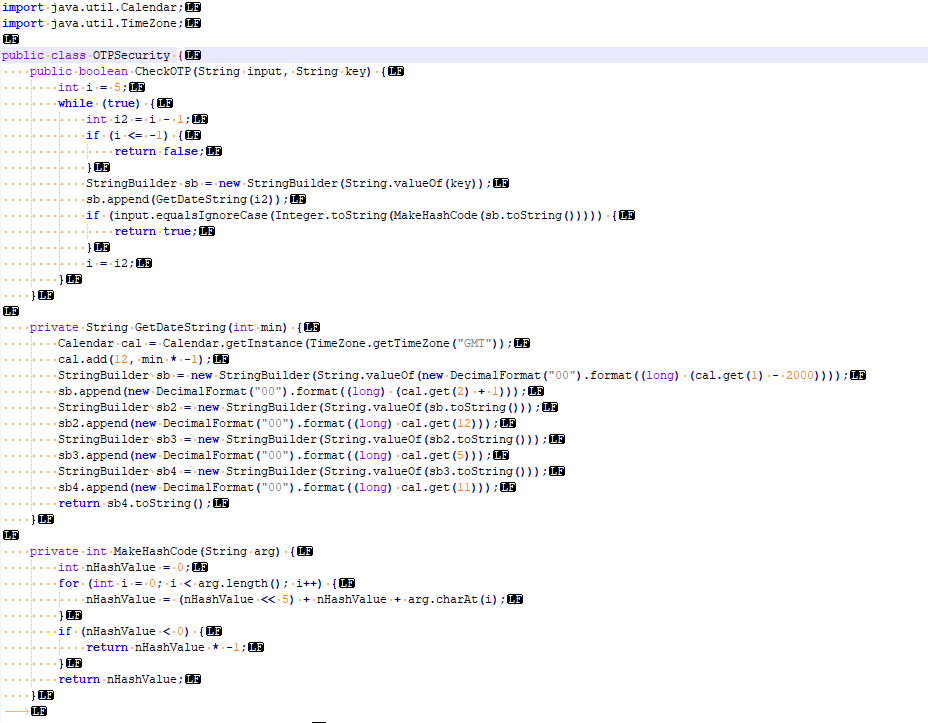

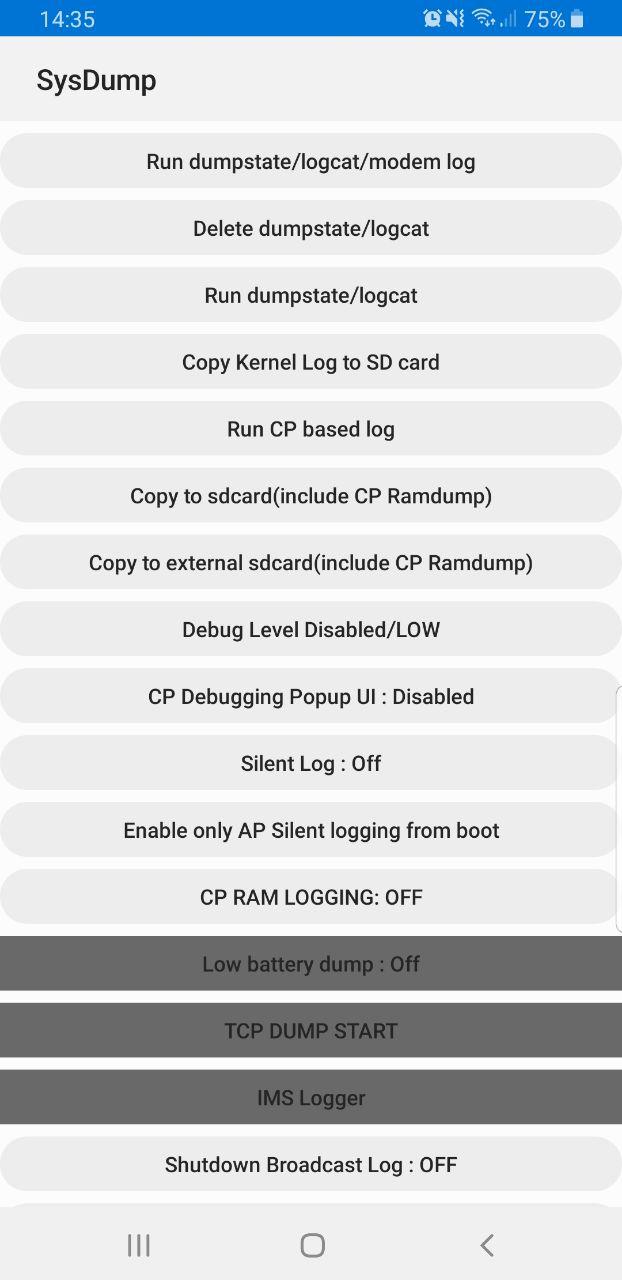

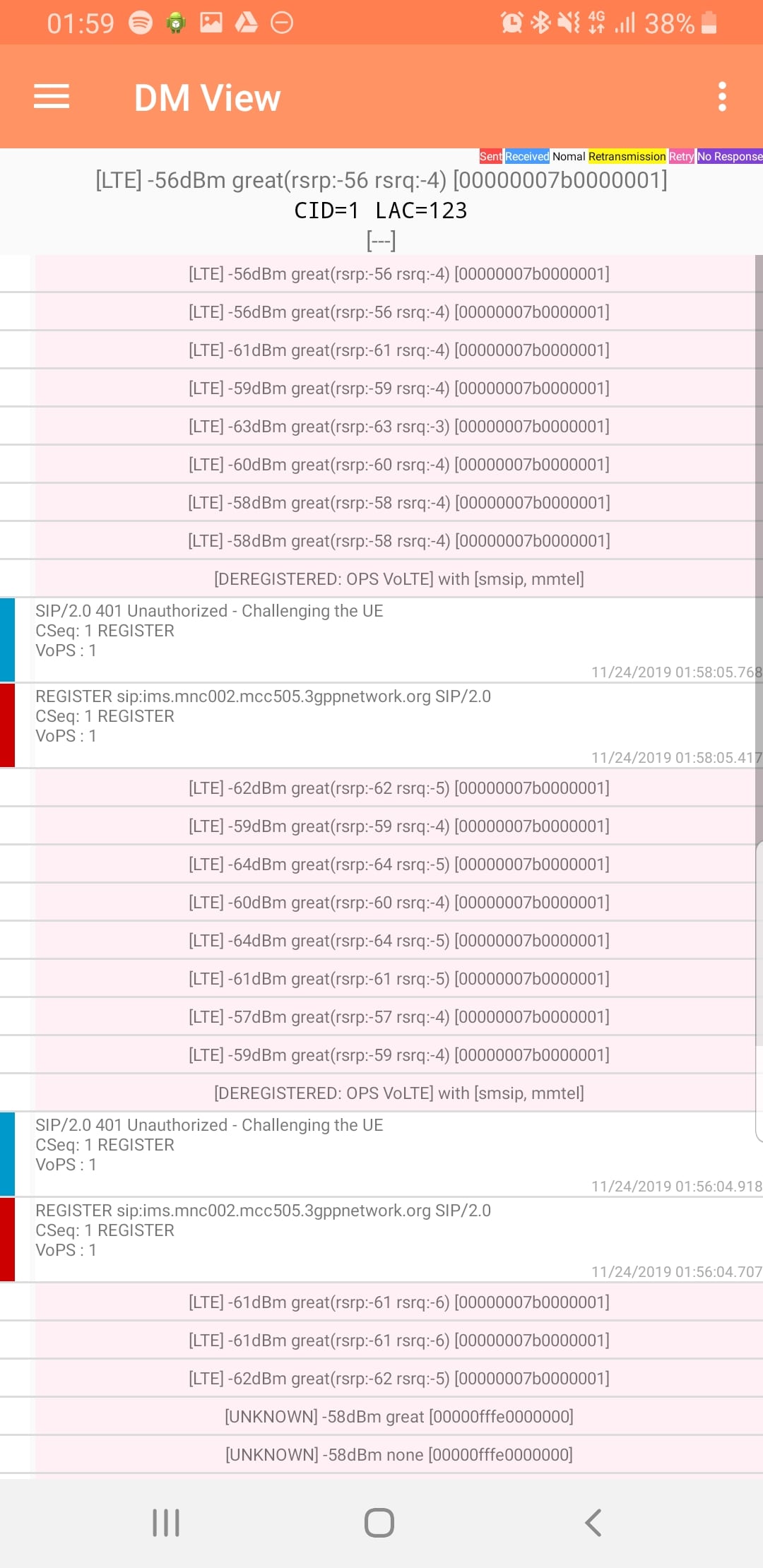

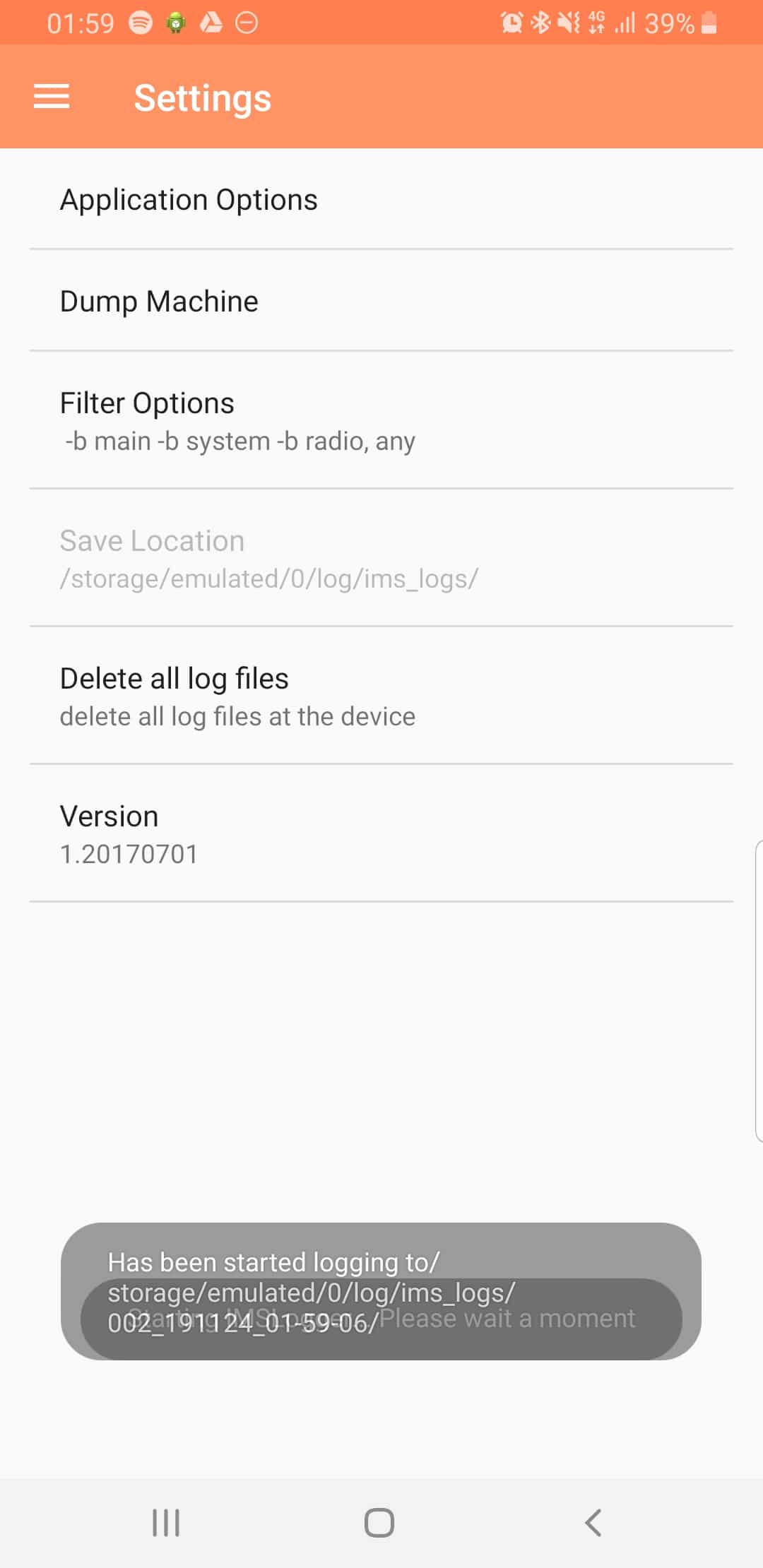

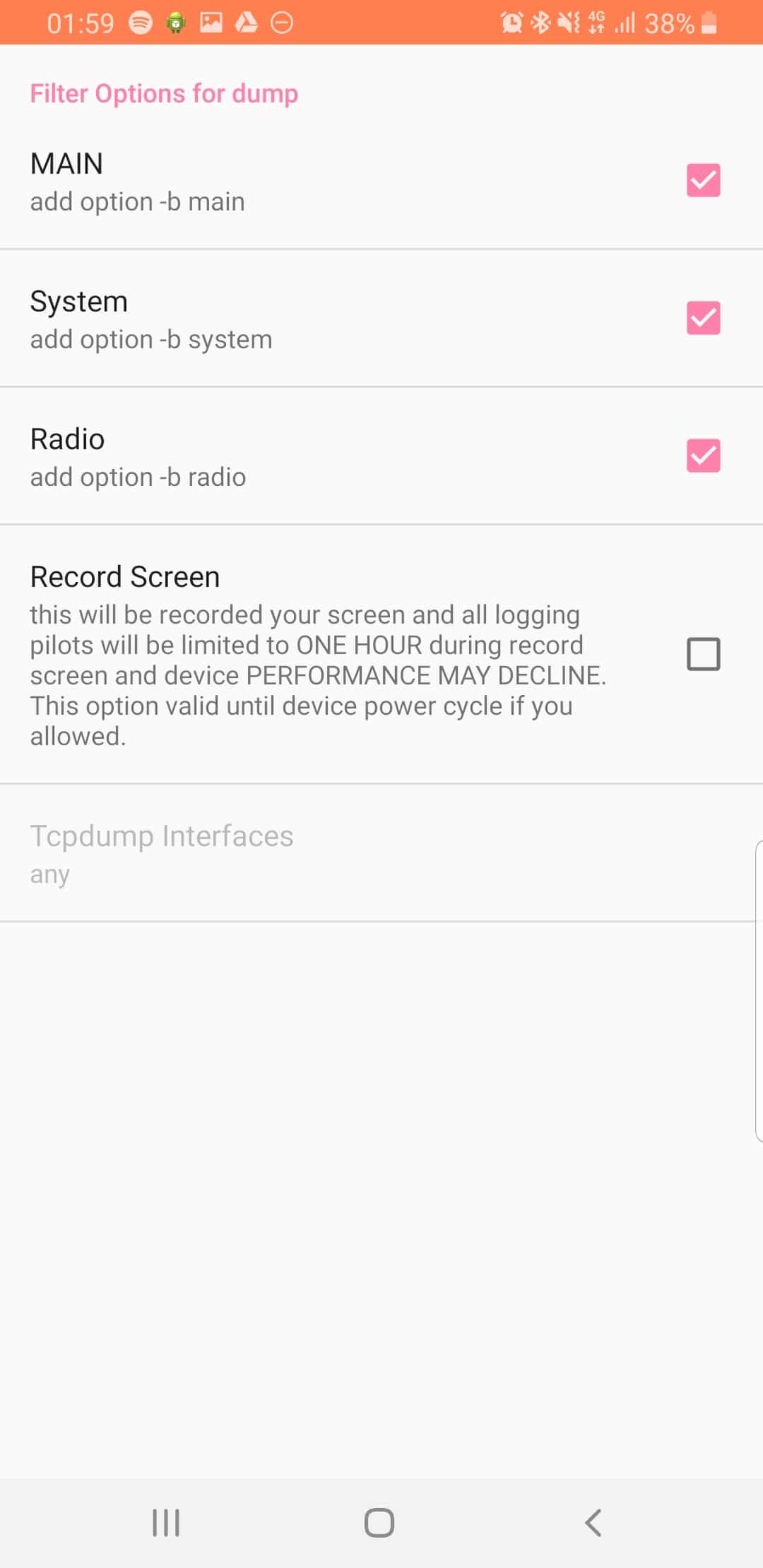

Samsung UEs feature a Sysdump utility that has an IMS Debugging tool, sadly it’s only their for carriers doing IMS interop testing.

After a bit of work I detailed in this post – Reverse Engineering Samsung Sysdump Utils to Unlock IMS Debug & TCPdump on Samsung Phones – I managed to create a One-Time-Password generator for this to generate valid Samsung OTP keys to unlock the IMS Debugging feature on these handsets.

I outlined turning on these features in this post.

This means without engineering firmware you’re able to pull a bunch of debugging info off the UE.

If you’ve recently gone through this, are going through this or thinking about it, I’d love to hear your experiences.

I’ll be continuing to share my adventures here and elsewhere to help others get their own VoLTE networks happening.

If you’re leaning about VoLTE & IMS networks, or building your own, I’d suggest checking out my other posts on the topic.