There’s often a lot of focus on the signalling side of VoIP, but RTP (the Real-time Transport Protocol) is the media protocol that actually carries the voice over IP.

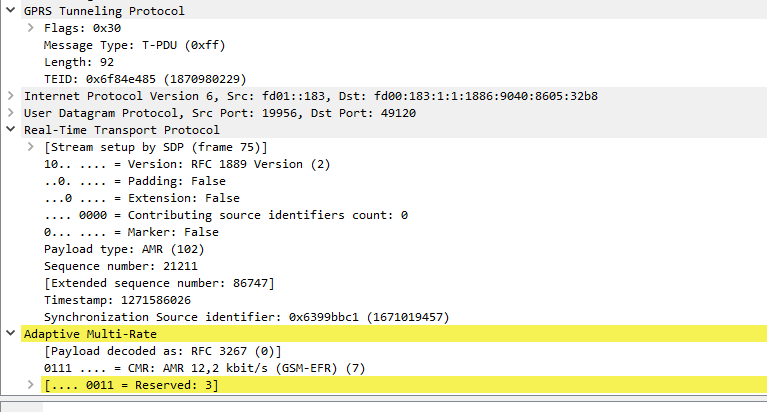

RTP is designed to be bare-bones and adaptable. An RTP packet doesn’t have pretty RFC822 style headers that are easy to read, but rather a fixed-width binary header, with different bit positions denoting different values to keep the size down. There’s no checksum in the protocol, no error correction, or anything else that might add overhead.

RTP is the transport for the media: it carries the media as a payload, but it’s up to the system creating the RTP packets what goes inside that payload. The header of an RTP packet does denote the payload type, but RTP has no way to verify that the contents of the payload match the payload type specified.

First defined in 1996 (RFC 1889), RTP hasn’t seen much evolution, primarily owing to its design being as lightweight and simple as possible. RTP had a bit of an update in 2003 under RFC 3550, but that mainly touched upon changes to the timer algorithm (that deserves a post of its own). There have been pushes in the past for a further cut-down RTP with fewer fields, because with a fixed-width header, unused fields are simply filled with zeros and the header takes up the same space on the wire regardless.

RTP is generally carried over UDP, but it can run over TCP. Running your RTP traffic over TCP can be pretty costly due to its time-sensitive nature and the sheer volume of packets you’ll be seeing. If you’re packetizing a G.711 a-law call (sampled at 8,000 Hz, with 20 ms of audio per packet) that’s 160 samples in each packet and a packet every 20 ms: 50 packets per second, or 3,000 packets each direction per minute on UDP. If you were to use TCP to transport these packets you’d add acknowledgements on top of that, and any lost segment would be retransmitted and hold up everything queued behind it. That means more packets on the wire and much more jitter and packet delay variation (PDV), all to recover audio that would have been better off skipped.
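As a quick sanity check on the packet-rate arithmetic, here’s a minimal Python sketch. The function name `packets_per_minute` is made up for illustration, and it assumes one RTP packet per ptime interval with no silence suppression.

```python
def packets_per_minute(ptime_ms: int) -> int:
    """Packets sent in one direction per minute, one packet per ptime interval."""
    return 60_000 // ptime_ms


print(packets_per_minute(20))  # 3000 for a 20 ms G.711 stream
```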

Header Fields

The data in RTP headers is packed in binary, which keeps its size down and processing minimal, but also means it’s pretty rigidly defined in terms of spacing. It’s not like a SIP header, which might look like To: [email protected]\r\n and wastes precious space on the wire on the “To: ” and the “\r\n”. Instead it’s fixed positioning all the way, with just the data.

If you haven’t had the joys of working with 90’s data files in fixed width formats, the premise is fairly simple; each value has a start and end position within a document. More info on creating RTP headers can be found in the post “Crafting RTP Packets”.

Generally when working with RTP packets on the wire, all these header fields are joined one after another and broken up into blocks of 8 bits (octets), all to ensure the packet is as small as practical when it’s transmitted. Packet capture tools generally display those octets in hex.
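To make the “join the fields, split into octets, show as hex” idea concrete, here’s a small Python sketch. The helper name `bits_to_hex` is hypothetical, and the example values are the common defaults for a G.711 a-law stream (version 2, no padding, no extension, no contributing sources, marker off, payload type 8).

```python
def bits_to_hex(fields):
    """Join fixed-width bit strings, split into octets, and render each octet as hex."""
    bits = "".join(fields)
    if len(bits) % 8 != 0:
        raise ValueError("fields must add up to a whole number of octets")
    octets = [bits[i:i + 8] for i in range(0, len(bits), 8)]
    return "".join(f"{int(octet, 2):02x}" for octet in octets)


# version, padding, extension, CSRC count, marker, payload type (PCMA)
first_two_octets = bits_to_hex(["10", "0", "0", "0000", "0", "0001000"])
print(first_two_octets)  # 8008
```

In real code you’d normally work with integers and bit shifts rather than strings of 1s and 0s, but the string form lines up nicely with how the fields are described here.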

Version (2 bits)

RTP has had two published versions, but in both the value to put in this field is binary 10 (decimal 2). If you are reading this on a machine that isn’t running DOS, there’s a good chance you’ll only see version 2.

If your traffic is routed through a wormhole, or your network has some serious latency issues (several decades), you could find yourself working with a media stream from pre-1996 (hopefully not) using the draft version of RTP, which has a value of binary 01 (decimal 1). But if you were dealing with RTP’s predecessor, vat, this value would be 0. (vat and RTP aren’t the same.)

Padding (1 bit)

If you’re encrypting your packets you may need them to be a specific size, and for this you may need to pad the packets out at the end. To do this you’d enable padding by putting a 1 here and then add the padding octets after the payload, with the very last octet stating how many octets of padding there are (including itself). In most cases this isn’t used though, and this value will be 0.
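Here’s a rough Python sketch of padding a payload out to a block size. The function names and the idea of passing in a `block_size` are assumptions for illustration; the part that comes from the spec is that the final octet holds the count of padding octets, including itself.

```python
def pad_payload(payload: bytes, block_size: int):
    """Return (payload_with_padding, padding_bit) for the given block size."""
    if not 1 <= block_size <= 256:
        raise ValueError("pad length must fit in a single octet")
    remainder = len(payload) % block_size
    if remainder == 0:
        return payload, 0  # already aligned, padding bit stays 0
    pad_len = block_size - remainder
    # Zero-fill, then finish with the count of padding octets (itself included).
    return payload + bytes(pad_len - 1) + bytes([pad_len]), 1


def strip_padding(padded: bytes) -> bytes:
    """Remove padding from a payload whose packet had the padding bit set."""
    if not padded:
        raise ValueError("empty payload")
    pad_len = padded[-1]
    if pad_len == 0 or pad_len > len(padded):
        raise ValueError("invalid padding length")
    return padded[:-pad_len]


padded, padding_bit = pad_payload(b"abcde", 4)
print(padded, padding_bit)  # b'abcde\x00\x00\x03' 1
```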

Extension (1 bit)

Unlike a lot of RFC documents that are all “must” and “shall”, RTP was designed more as a framework, a template for implementers. The extension field was added to allow individual implementations to carry additional custom data in the headers, while being ignored by other network elements that don’t support the extensions. If this is enabled, the fixed header (and any CSRC list) is followed by a header extension: 16 bits of you-decide, a 16 bit length, and then the extension data itself.

However like the padding value, this is likely to be 0.

Contributing Source (CSRC) Count (4 bits)

RTP allows a packet to list multiple Contributing Sources. This means on a 3-way call, when your switch takes the two audio streams, mixes them together and sends each endpoint a single media stream, it can list the SSRC of each party whose audio went into the mix as a Contributing Source, so the receiver can still tell who is actually talking.

Again, it’s likely this is 0000.

Marker (1 bit)

Whether the marker bit is set or not is actually up to the profile and payload format in use. In video the marker bit is often used to signify the last packet of a frame, and in audio it’s generally used to denote the end of silence and the start of talking, called a “talkspurt”.

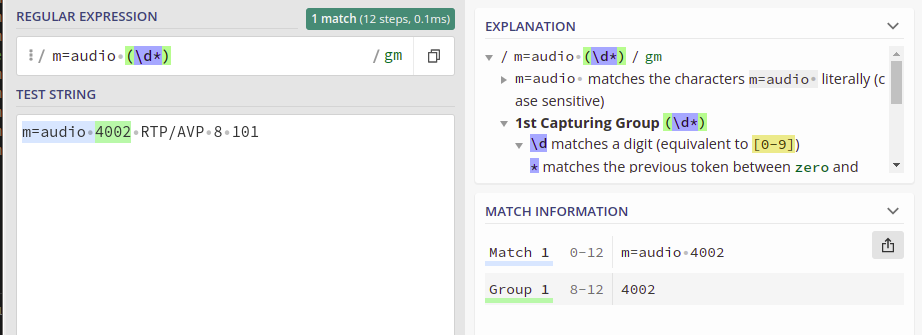

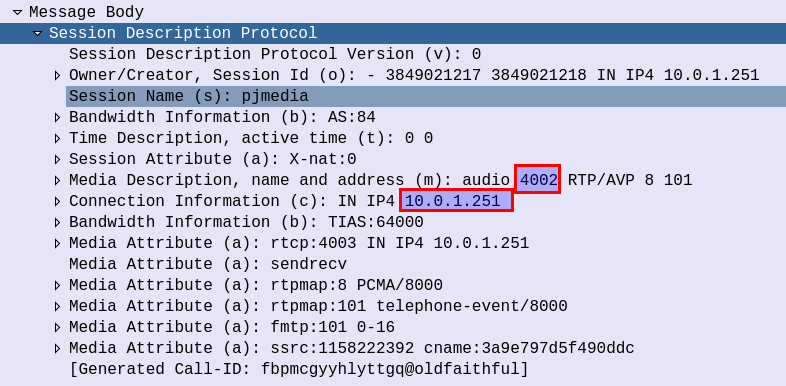

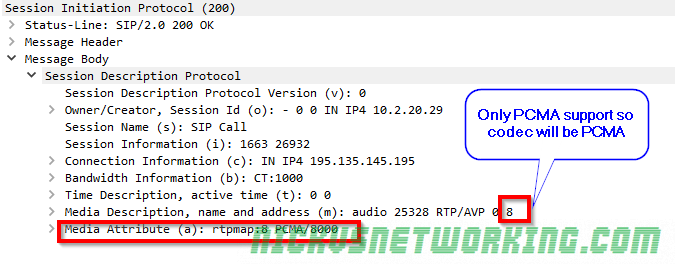

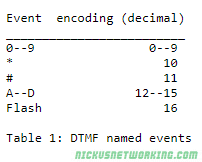

Payload Type (7 bits)

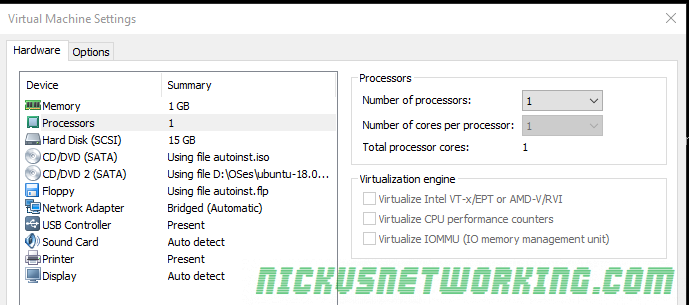

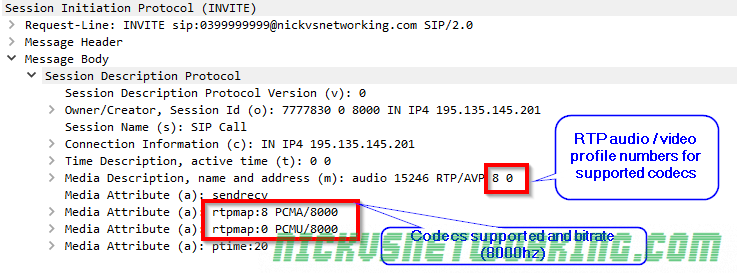

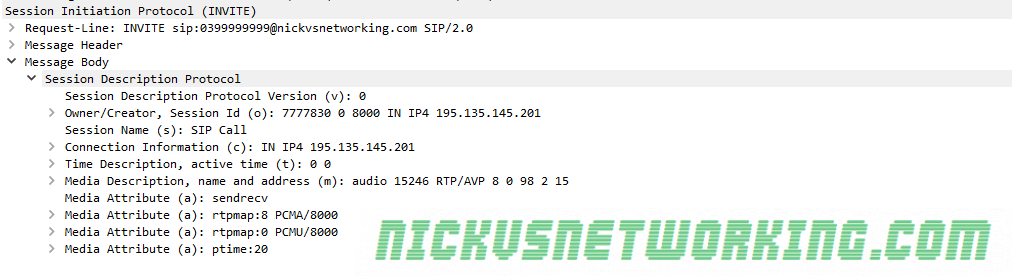

The payload type is what specifies the contents of the payload. In voice terms this means the codec we’re using. RFC 3551 defines a set of predefined payload types, each with its own Payload Type code.

Your values might not appear in the RFC 3551 definitions if you’re using a non-standard codec, and that’s OK. RTP could be used to play video games or pilot an RC plane. It’s really just a protocol to carry a stream of real time data quickly from point A to point B with as little overhead as possible.

PCMA / PCMU is king here thanks to G.711’s widespread adoption, due to it being the codec used in TDM and the fact you don’t need to transcode or compress PCM from a TDM source to bring the traffic into the network. TDM / circuit switched services are way less common on the network edge these days, but G.711 still holds on as the de facto standard.

So for a G.711 a-law (PCMA) payload this value would be 8, which is 0001000 in binary. (It’s also equivalent to 1000 in binary, but we need to fill all 7 bits because we’re using fixed-width formatting, so we prefix it with zeros. If we were using GSM, which is 3 in decimal and 11 in binary, we’d format it as 0000011.)
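The zero-padding is easy to reproduce in Python with `format()`. The helper `second_octet` below is a made-up name for illustration; it shows how the marker bit and the 7 bit payload type share one octet of the header.

```python
def second_octet(marker: int, payload_type: int) -> int:
    """Combine the 1 bit marker and 7 bit payload type into a single octet."""
    if marker not in (0, 1):
        raise ValueError("marker must be 0 or 1")
    if not 0 <= payload_type <= 127:
        raise ValueError("payload type must fit in 7 bits")
    return (marker << 7) | payload_type


print(format(8, "07b"))                   # 0001000 (PCMA)
print(format(3, "07b"))                   # 0000011 (GSM)
print(format(second_octet(0, 8), "08b"))  # 00001000
```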

For the full list of Payload types check out IANA’s Real-Time Transport Protocol (RTP) Parameters.

Sequence Number (16 bits)

The sequence number starts at a (supposedly) random value and increments by 1 for each packet sent.

This allows the receiving party to calculate packet loss, because if you receive packets with the sequence number 1,2,3,5,6 you know you’ve missed packet 4.

It also helps us calculate our packet delay variation (PDV), and helps our jitter buffer re-assemble the stream: if we receive packets 1,3,2,4,5,6 we can see they’re out of sequence and know to play them back in the order 1,2,3,4,5,6, not the order we received them.
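Both checks can be sketched in a few lines of Python. The function names are hypothetical, and to keep things short this assumes the sequence numbers in the window don’t wrap around past 65535; a real jitter buffer has to cope with that.

```python
def missing_sequence_numbers(received):
    """Sequence numbers absent between the lowest and highest ones seen."""
    seen = set(received)
    return [n for n in range(min(seen), max(seen) + 1) if n not in seen]


def playback_order(received):
    """Packets sorted into sequence order, with duplicates dropped."""
    return sorted(set(received))


print(missing_sequence_numbers([1, 2, 3, 5, 6]))  # [4]
print(playback_order([1, 3, 2, 4, 5, 6]))         # [1, 2, 3, 4, 5, 6]
```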

The starting sequence number is supposed to be random. Having it start at a random value adds an extra unknown part of the packet that someone trying to break any crypto on top has to guess. Polycom however just start all theirs at 0. (Slow clap)

This is a 16 bit number, so like the payload type we’ll have to convert it from decimal to binary, then pad it to be 16 bits. So if our starting sequence number is 1234 we’d have to convert it to binary (10011010010) and then pad it to 16 bits (0000010011010010).
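In Python the conversion and padding are a one-liner, and a bit mask keeps the counter inside 16 bits. `next_sequence_number` is an illustrative name, and the wrap back to 0 after 65535 follows from the field being 16 bits wide.

```python
def next_sequence_number(current: int) -> int:
    """Increment by 1, wrapping from 65535 back to 0 to stay within 16 bits."""
    return (current + 1) & 0xFFFF


print(format(1234, "016b"))         # 0000010011010010
print(next_sequence_number(1234))   # 1235
print(next_sequence_number(65535))  # 0
```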

Timestamp (32 bits)

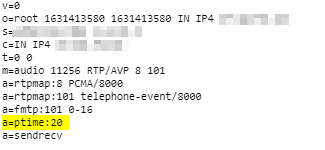

The timestamp, like the sequence number, is supposed to begin with a random number, and then increases by the number of sampling instants between packets, “monotonically and linearly in time”. In essence it means a random starting number + time between packets.

The value increments by the packetization time (ptime) in seconds x the sampling (clock) rate in Hz.

So for a call with a ptime of 20 ms at 8 kHz this would be:

0.020 x 8000 = 160, so increment by 160 each packet.
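The same sum as a Python sketch, with made-up function names. It assumes a fixed ptime and clock rate for the whole stream, and wraps at 32 bits because that’s the width of the field.

```python
def timestamp_increment(ptime_ms: int, clock_rate_hz: int) -> int:
    """Timestamp units covered by one packet: ptime in seconds x clock rate."""
    return clock_rate_hz * ptime_ms // 1000


def timestamps(start: int, count: int, step: int):
    """First `count` timestamps of a stream, wrapping at 32 bits."""
    return [(start + i * step) % 2**32 for i in range(count)]


step = timestamp_increment(20, 8000)
print(step)                            # 160
print(timestamps(837026880, 3, step))  # [837026880, 837027040, 837027200]
```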

Having an accurate source for the timestamp allows accurate stats to be generated for PDV, jitter etc. Without this value being accurate your RTCP values will always appear off, even if the audio is fine.

If we wanted a timestamp of 837026880, we’d need to convert it to binary (110001111001000000010001000000) and pad it to ensure it’s 32 bits long. This value is only 30 bits, so we prefix it with two zeros (00110001111001000000010001000000).

SSRC (Synchronization Source Identifier) (32 bits)

The SSRC is like a Call-ID, a unique value that identifies one RTP stream from another. If you had two packets to the same port, from the same IP, with roughly the same sequence number & timestamp you’d need a way to determine which RTP stream is for which session. This is where the SSRC comes in, a unique identifier that identifies one RTP stream from the others.

To keep it random the spec’ even suggests ways to generate a random value based on other values.

If we wanted to use 185755418 as our SSRC we’d need to convert it to binary (1011000100100110011100011010) and pad it to 32 bits (00001011000100100110011100011010).
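Here’s a short Python sketch of picking and formatting an SSRC. Note this swaps in Python’s `secrets` module as the random source rather than the method the spec suggests, and `new_ssrc` is a name invented for this example. A real implementation also needs to handle two streams picking the same value.

```python
import secrets


def new_ssrc() -> int:
    """Pick a random 32 bit identifier for a new RTP stream."""
    return secrets.randbits(32)


print(format(185755418, "032b"))  # 00001011000100100110011100011010
print(format(new_ssrc(), "032b"))
```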

CSRC List (Contributing source list) (0 to 15 individual 32 bit values)

This contains a list of the contributing sources. Depending on how many sources were specified in your CSRC Count, this can have any number of items from 0 to 15. So if you had one contributing source in the CSRC Count, then you’d have one 32 bit value here, holding the SSRC identifier of that source.

This list is left out entirely if the CSRC Count is 0.
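Pulling the fields back out of a received packet is the reverse of all of the above. This is a minimal Python sketch using the standard `struct` module; the function name and the dictionary keys are my own choices, and it ignores header extensions entirely.

```python
import struct


def parse_rtp_header(packet: bytes) -> dict:
    """Decode the fixed 12 octet RTP header plus any CSRC list."""
    if len(packet) < 12:
        raise ValueError("too short to be an RTP packet")
    first, second, sequence, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    csrc_count = first & 0x0F
    end = 12 + 4 * csrc_count  # each CSRC entry is one 32 bit value
    if len(packet) < end:
        raise ValueError("packet is shorter than its CSRC count implies")
    return {
        "version": first >> 6,
        "padding": (first >> 5) & 1,
        "extension": (first >> 4) & 1,
        "csrc_count": csrc_count,
        "marker": second >> 7,
        "payload_type": second & 0x7F,
        "sequence_number": sequence,
        "timestamp": timestamp,
        "ssrc": ssrc,
        "csrc_list": list(struct.unpack(f"!{csrc_count}I", packet[12:end])),
    }


header = parse_rtp_header(bytes.fromhex("800804d231e404400b12671a"))
print(header["payload_type"], header["sequence_number"])  # 8 1234
```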

Payload

The payload is whatever you want it to be. So long as you specify it in the Payload Type field, and pad it if padding is enabled, any data can be put here.
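Putting the whole thing together, here’s a rough Python sketch that builds a complete packet from the example values used in this post (payload type 8, sequence number 1234, timestamp 837026880, SSRC 185755418). `build_rtp_packet` is a hypothetical helper, it assumes version 2 with no padding, extension or contributing sources, and the payload is just 160 placeholder bytes rather than real audio.

```python
import struct


def build_rtp_packet(payload_type, sequence_number, timestamp, ssrc, payload, marker=0):
    """Fixed 12 octet header (version 2, P=0, X=0, CC=0) followed by the payload."""
    first = 2 << 6                          # version in the top two bits
    second = (marker << 7) | payload_type   # marker bit + 7 bit payload type
    header = struct.pack("!BBHII", first, second, sequence_number, timestamp, ssrc)
    return header + payload


packet = build_rtp_packet(8, 1234, 837026880, 185755418, bytes(160))
print(packet[:12].hex())  # 800804d231e404400b12671a
print(len(packet))        # 172
```

That’s 12 octets of header for 160 octets of audio, which is about as lean as it gets.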

Further Reading

I got a copy of Colin Perkins’ book RTP: Audio and Video for the Internet, which covers everything you’ll ever need to know about RTP. I’d highly recommend it. It was written in 2003, and is still just as relevant as more and more traffic moves off circuit switched and onto packet switched networks.