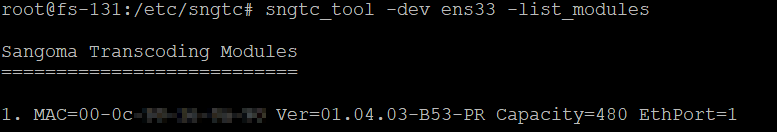

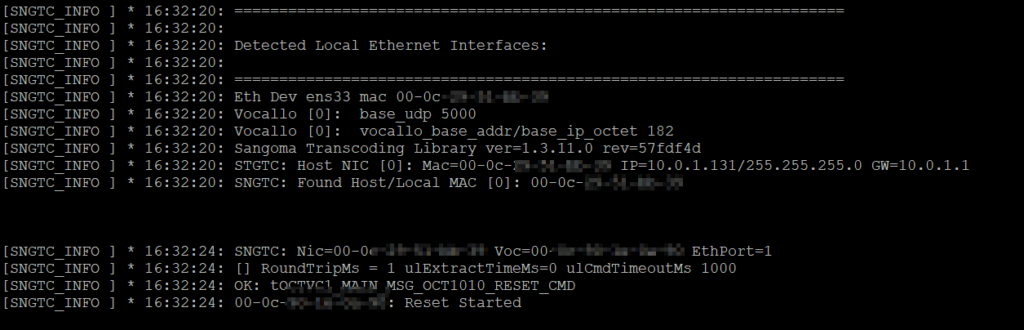

We’re doing more and more network automation, and one thing that would be valuable to us is having all the IPs in HOMER SIP Capture show up as the hostnames of the VMs running each service.

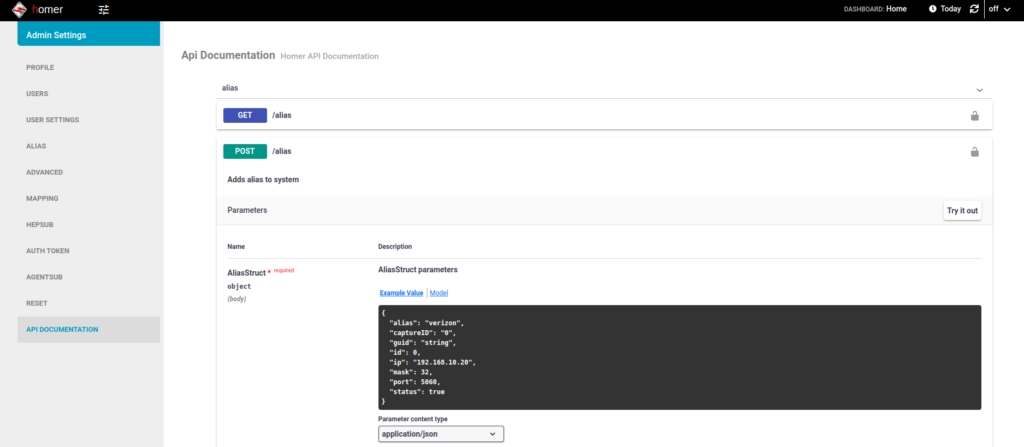

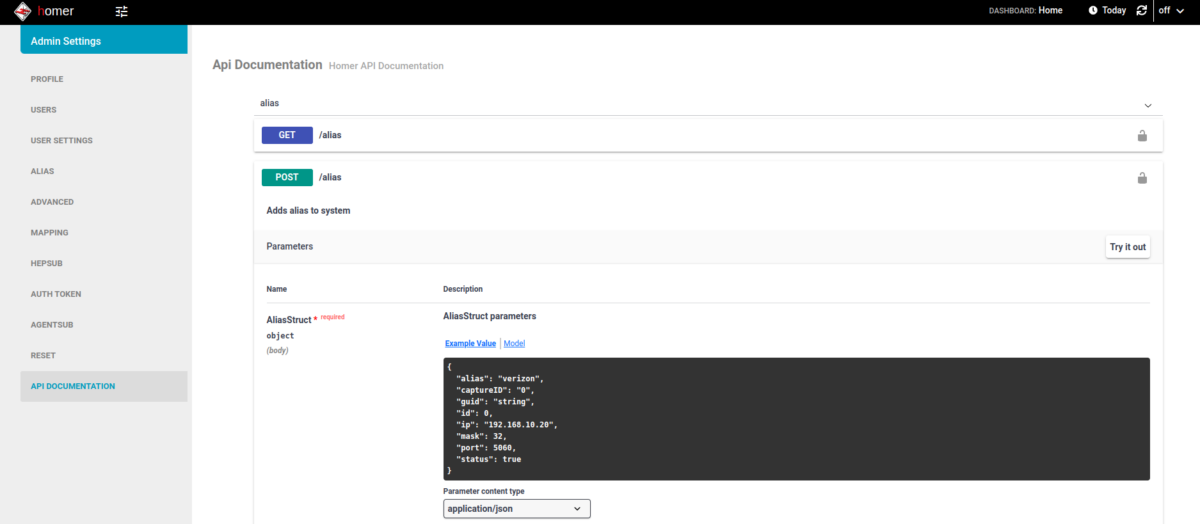

Luckily for us, HOMER has an API for this ready to roll, and best of all, it’s Swagger-based and well documented (awesome!).

(Probably through my own failure to properly RTFM) I was struggling to work out the correct (current) way to authenticate against the API service using a username and password.

Because the HOMER team are awesome, however, the web UI for HOMER is just an API client.

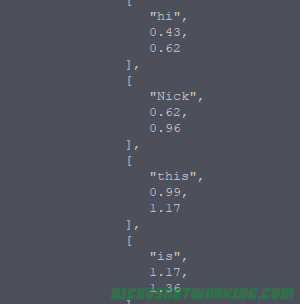

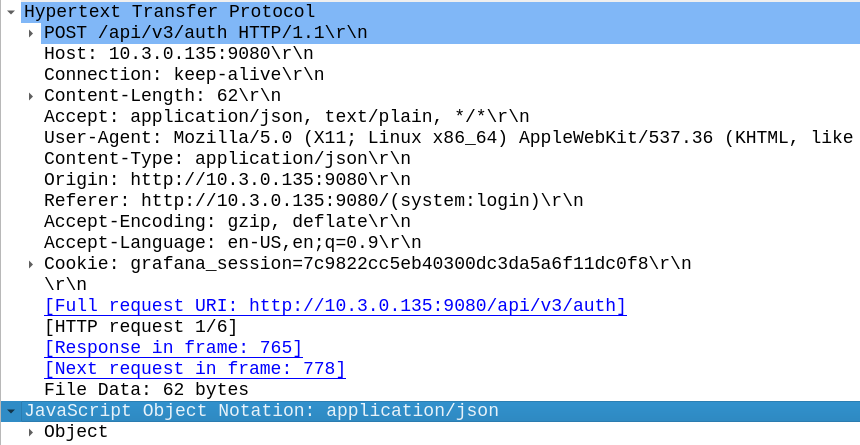

This means that to work out how to log into the API, I just needed to fire up Wireshark, log into the web UI via my browser, and then flick through the packets for a real-world example.

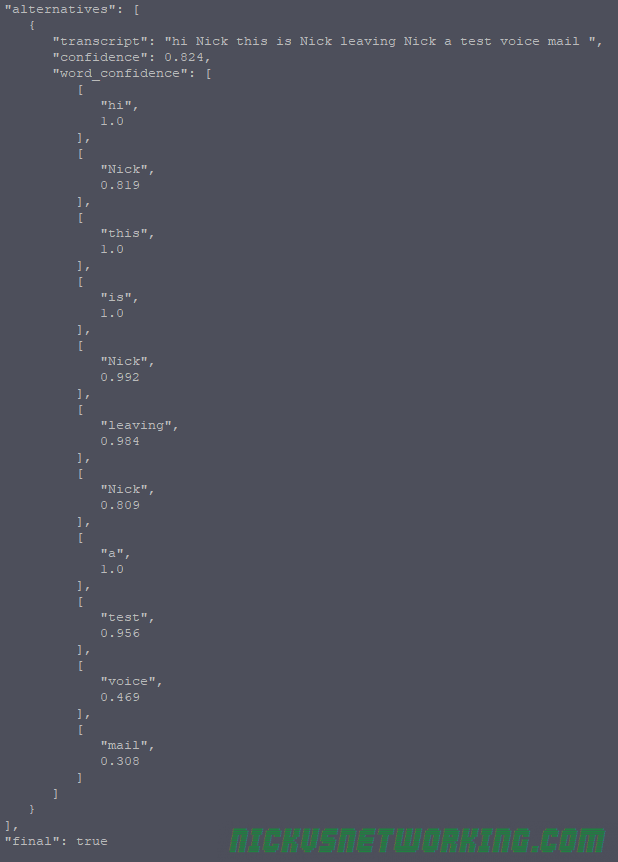

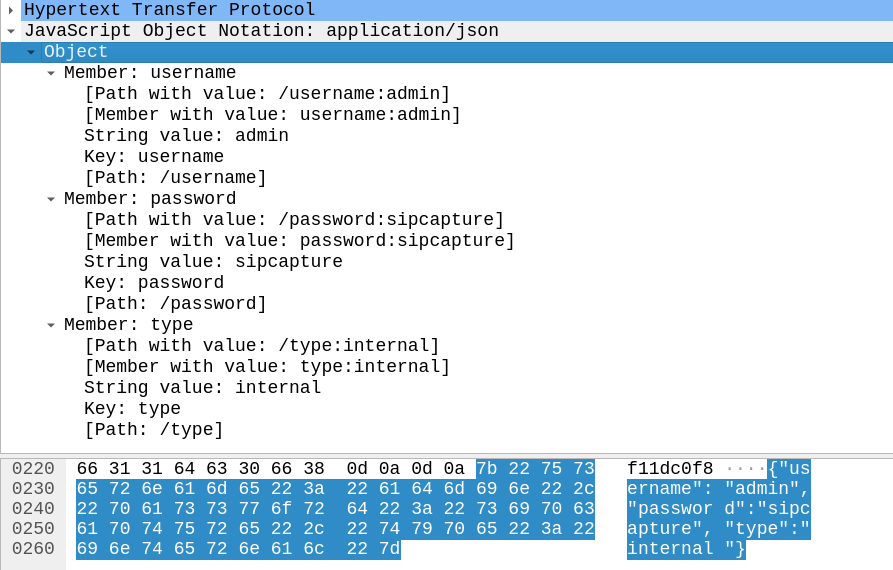

In the login action I could see the browser POSTs a JSON body with the username and password to /api/v3/auth:

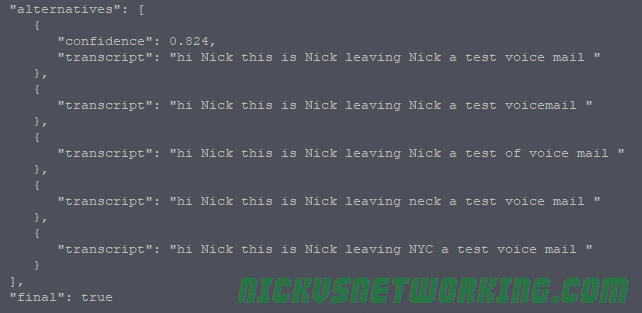

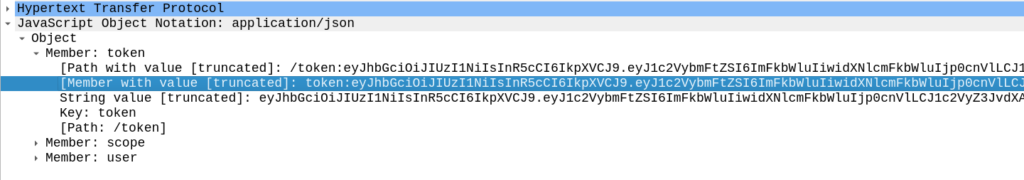

    {"username":"admin","password":"sipcapture","type":"internal"}

And in return the Homer API server responds with a 201 Created and an auth token:

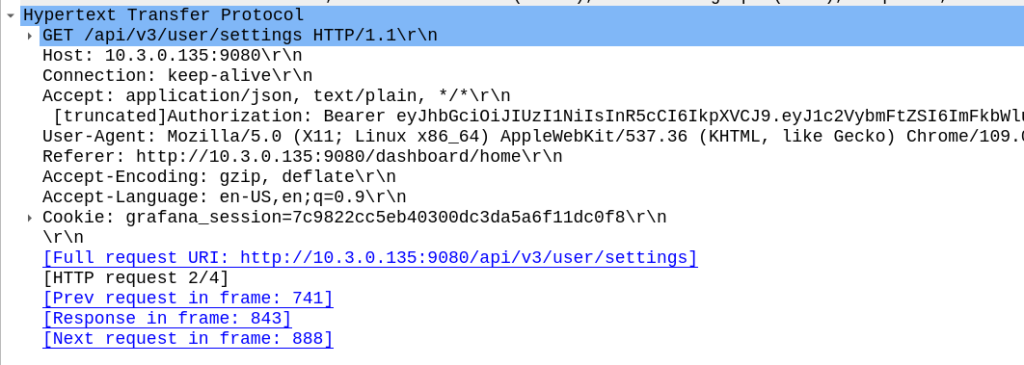

Now, to use the API, we just need to include that token as a Bearer token in the Authorization header of each request, and then we can hit all the API endpoints we want!
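The login flow above can be sketched as a small helper. This is only a rough sketch under a few assumptions: `login` is a hypothetical name, `session` is anything that behaves like a `requests.Session`, the response JSON carries the token under a `token` key, and anything other than the 201 seen in the capture is treated as a failure.

```python
def login(session, base_url, username, password):
    """Log in to the HOMER API and store the bearer token on the session.

    `session` is expected to behave like requests.Session, i.e. it has a
    post() method and a headers mapping. The helper name is hypothetical.
    """
    response = session.post(
        base_url + "/api/v3/auth",
        # Same body the browser was seen sending in the capture.
        json={"username": username, "password": password, "type": "internal"},
    )
    # The capture showed 201 Created on success; assume anything else failed.
    if response.status_code != 201:
        raise RuntimeError("HOMER login failed with HTTP %d" % response.status_code)
    # Assumption: the token is returned under the "token" key.
    token = response.json()["token"]
    # Set the header once so every later call on this session carries it.
    session.headers.update({"Authorization": "Bearer " + token})
    return token
```

With Requests installed, this would be called as `login(requests.Session(), "http://homer:9080", "admin", "sipcapture")`, after which the same session can be reused for the other endpoints without passing the header each time.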

For me, the goal was to set up the aliases from our automatically populated list of hosts. So using the info above I put together a simple Python script with Requests to achieve this:

    import requests

    s = requests.Session()

    # Log in and get a token
    url = 'http://homer:9080/api/v3/auth'
    json_data = {"username": "admin", "password": "sipcapture"}
    x = s.post(url, json=json_data)
    print(x.content)
    token = x.json()['token']
    print("Token is: " + str(token))

    # Add a new alias
    alias_json = {
        "alias": "Blog Example",
        "captureID": "0",
        "id": 0,
        "ip": "1.2.3.4",
        "mask": 32,
        "port": 5060,
        "status": True
    }
    x = s.post('http://homer:9080/api/v3/alias', json=alias_json, headers={'Authorization': 'Bearer ' + token})
    print(x.status_code)
    print(x.content)

    # Print all aliases
    x = s.get('http://homer:9080/api/v3/alias', headers={'Authorization': 'Bearer ' + token})
    print(x.json())

And bingo we’re done, a new alias defined.

We wrapped this up in a for loop for each of the hosts / subnets we use and hooked it into our build system and away we go!
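A rough sketch of what that loop could look like is below. It is not our actual build code: `build_alias`, `push_aliases` and the `hosts` mapping are hypothetical names, `session` is assumed to behave like a `requests.Session`, and using the network address as the alias IP for a subnet is an assumption rather than something confirmed against HOMER.

```python
import ipaddress


def build_alias(name, cidr, port=5060):
    """Build one alias body in the same shape as the single-alias example."""
    # Accepts "1.2.3.4" (treated as a /32) or "10.0.0.0/24".
    network = ipaddress.ip_network(cidr, strict=False)
    return {
        "alias": name,
        "captureID": "0",
        "id": 0,
        # Assumption: a subnet alias uses the network address as its IP.
        "ip": str(network.network_address),
        "mask": network.prefixlen,
        "port": port,
        "status": True,
    }


def push_aliases(session, base_url, token, hosts):
    """POST one alias per (name, cidr) pair; return the names that failed."""
    headers = {"Authorization": "Bearer " + token}
    failed = []
    for name, cidr in hosts.items():
        response = session.post(
            base_url + "/api/v3/alias",
            json=build_alias(name, cidr),
            headers=headers,
        )
        # response.ok is True for any status code below 400.
        if not response.ok:
            failed.append(name)
    return failed
```

Here `hosts` would be something like `{"sbc-01": "1.2.3.4", "media-subnet": "10.0.0.0/24"}`, generated by whatever inventory the build system already has. Returning the failed names rather than raising lets one bad entry be reported without stopping the rest of the run.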

With the Homer API the world is your oyster in terms of functionality. All the features of the Web UI are exposed on the API, as the Web UI just uses the API (something I wish was more common!).

Using the Swagger-based API docs you can see examples of how to achieve everything you need to, and if you ever get stuck, just fire up Wireshark and do it in the Homer Web UI for an example of how the request bodies should look.

Thanks to the Homer team at QXIP for making such a great product!