For the past few years I’ve run a Dell R630 as one of our lab / testing boxes. It’s hosted down the road from me, and with 32 cores and 256 GB of RAM it’s got enough grunt to run what we need for testing stuff on the East coast and messing around. We’ve got a proper DC with compute in Sydney and Perth, but for breaking stuff, I wanted my own lab.

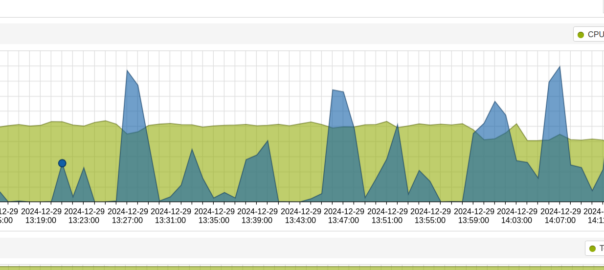

This box started on VMware, but over a long period of time I’d see really odd disk IO behavior that I couldn’t get to the bottom of.

Things would hang. For example, you’d go to edit a file on a VM in vi and have to wait 20 seconds for the file to open, yet I could cat the same file instantly, and other files I could vi instantly.

I initially thought it was that dreaded issue with Ubuntu boxes being unable to resolve their own hostname and waiting for DNS to time out every. single. time. it. did. anything, but I ruled that out when I got the same behavior with live CDs and non-Linux OSes.

In the end I narrowed it down to being related to Disk IO.

I read Matt Liebowitz’s book on VMware vSphere Performance, assuming there was a setting somewhere inside VMware I had wrong.

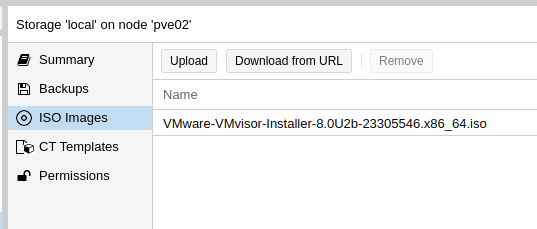

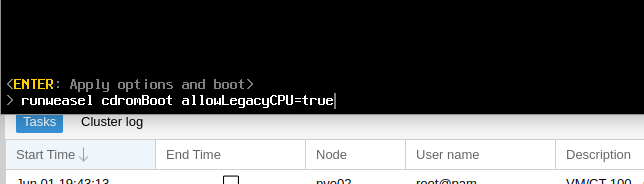

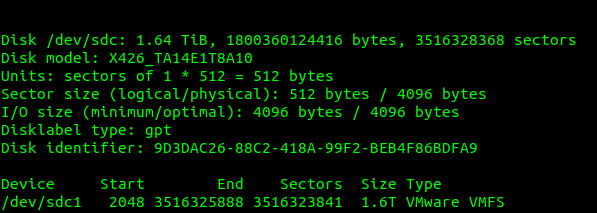

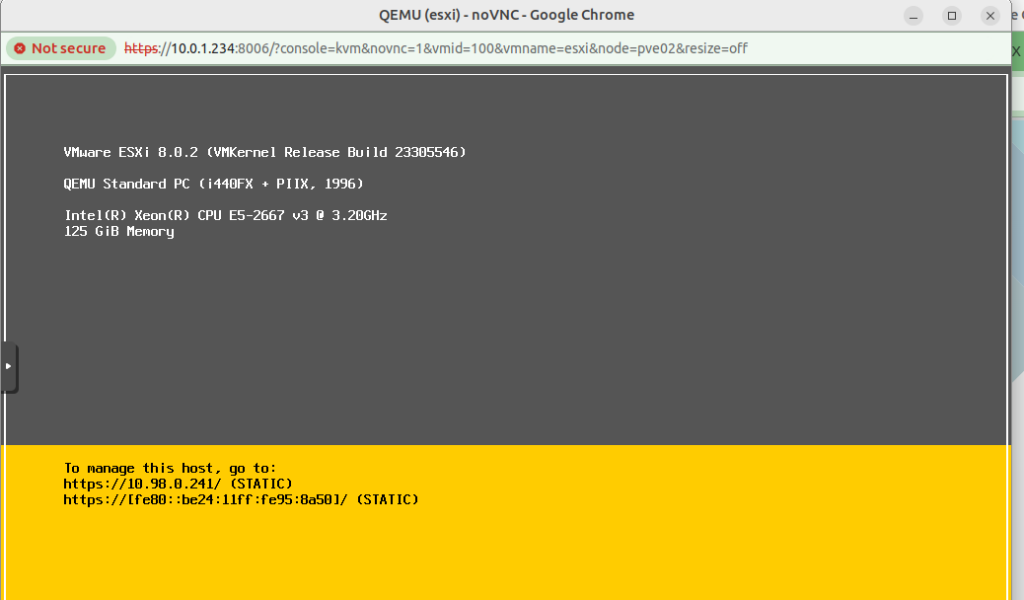

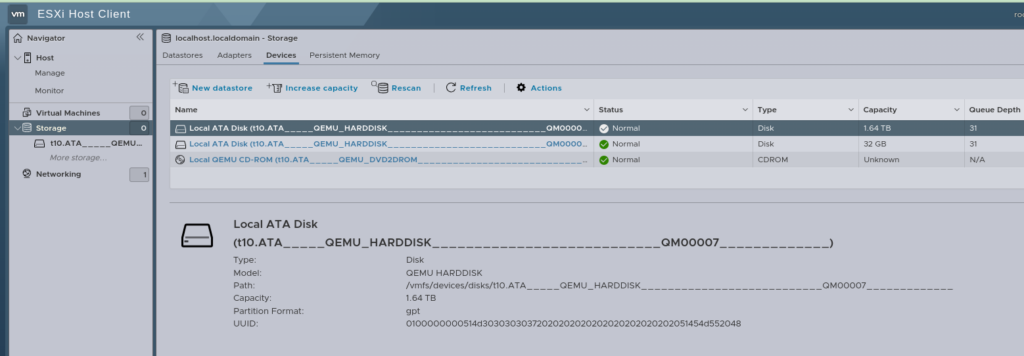

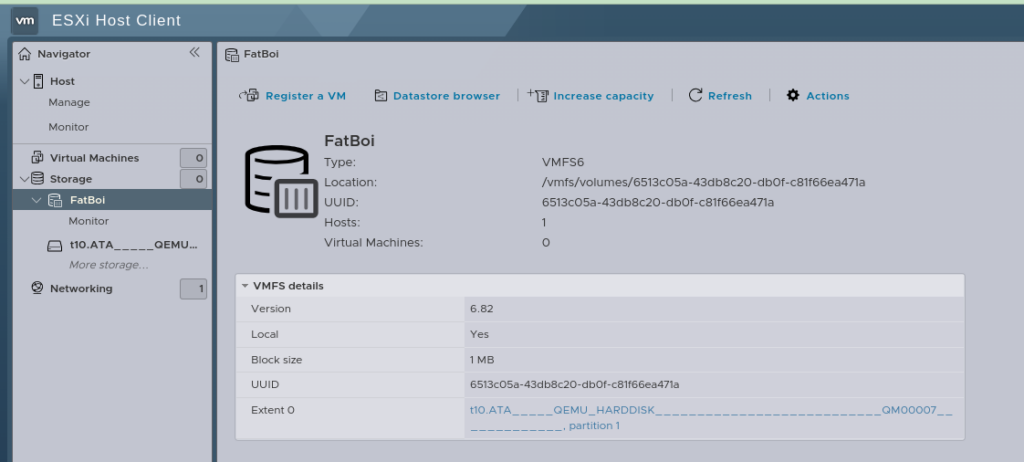

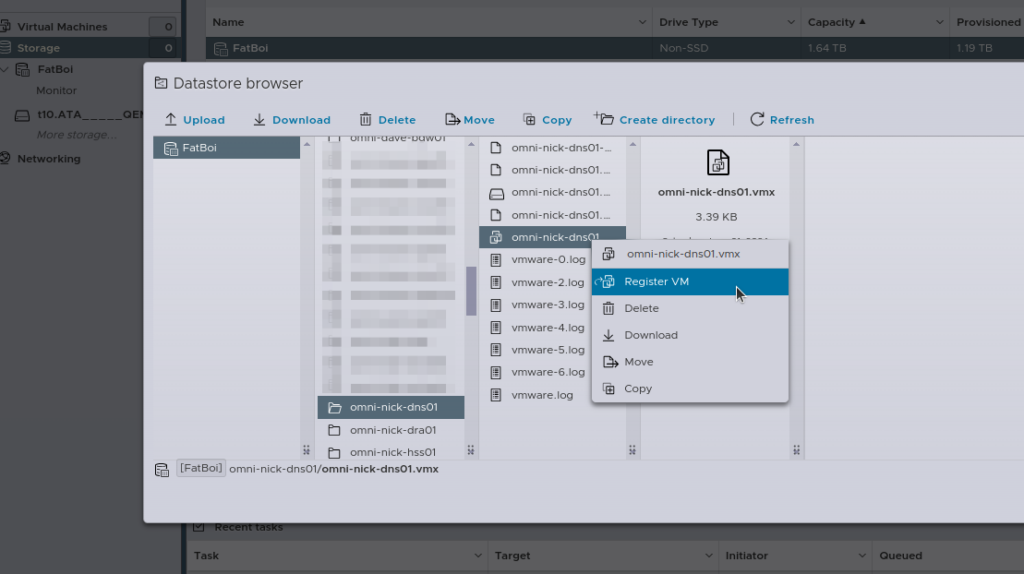

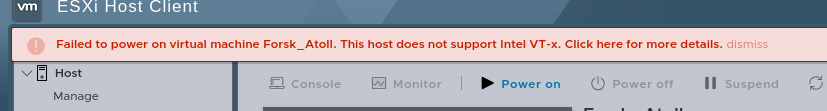

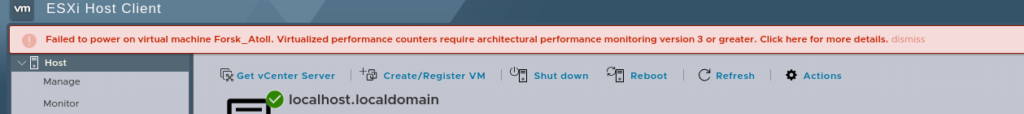

Around the same time all the unpleasantness was going down with VMware and licensing changes, so I moved to Proxmox (while keeping a virtualized copy of VMware running inside Proxmox).

But switching hypervisors didn’t fix the issue, so I could rule that out.

So I splashed out and swapped the 16k magnetic SAS drives in the RAID for new SSDs, but still the problem persisted – it wasn’t the drives, and I wasn’t seeing a marked increase in performance.

I did a bunch of tuning on the PERC card (disk caching, write ahead, etc.), but still the problem persisted.

At this stage I was looking at the PERC card or (less likely) the CPU/motherboard/RAM combo.

So over a quiet period, I moved some workloads back onto one of the old 16k magnetic SAS drives that I had pulled out to replace with the SSDs, and benchmarked the disk performance on the standalone SAS drive to compare against the RAID SSD performance.

I used iozone3 to benchmark the performance with:

    iozone -t1 -i0 -i2 -r1k -s1g /tmp
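For anyone wanting to repeat this, here’s a rough breakdown of what those flags do, wrapped in a tiny helper that just prints the command for a given directory. Two things here are my additions for the sake of the example and not what I ran above: the `iozone_cmd` function name, and the `-F` option with an `iozone.tmp` file name, which as far as I know is iozone’s documented way of choosing where the throughput-mode test file lives.

```shell
# iozone_cmd [DIR]
# Prints (does not run) an iozone command line for a test file inside DIR.
# iozone_cmd and iozone.tmp are hypothetical names used for this sketch.
iozone_cmd() {
  dir="${1:-/tmp}"
  # -t1   throughput mode with a single worker
  # -i0   test 0: write / rewrite
  # -i2   test 2: random read / random write
  # -r1k  1 kB record size
  # -s1g  1 GB test file
  # -F    explicit path for the test file (assumption, see above)
  echo "iozone -t1 -i0 -i2 -r1k -s1g -F $dir/iozone.tmp"
}
```

Calling `iozone_cmd /mnt/ssd` prints the command to copy and paste, which makes it easy to point the same test at a directory on each disk you want to compare.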

Here’s how the SSDs in the RAID compare to a standalone SAS drive (not in RAID):

| Metric | LXC on SSD RAID | Standalone SAS Drive | Difference (Standalone vs. RAID) |
|---|---|---|---|
| Initial Write (Child) | 314,682.91 kB/sec | 382,771.88 kB/sec | +21.6% |
| Initial Write (Parent) | 177,522.16 kB/sec | 119,112.43 kB/sec | -32.9% |
| Rewrite (Child) | 428,456.94 kB/sec | 470,486.44 kB/sec | +9.8% |
| Rewrite (Parent) | 180,007.46 kB/sec | 73,721.11 kB/sec | -59.0% |
| Random Read (Child) | 404,707.62 kB/sec | 406,057.00 kB/sec | +0.3% |
| Random Read (Parent) | 404,410.90 kB/sec | 397,718.31 kB/sec | -1.7% |
| Random Write (Child) | 126,042.59 kB/sec | 355,304.22 kB/sec | +181.9% |
| Random Write (Parent) | 4,497.75 kB/sec | 68,971.35 kB/sec | +1,434% |

That Random Write (Parent) at the bottom – Yeah that would explain the “weird” behavior I’ve been seeing on guest OSes.

As part of editing a file, vi creates a lock file. That would be written to a random sector, which is why opening a file took such a long time (while cat, which only reads, wouldn’t hit the same problem).
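A rough way to poke at this without a full iozone run is to time a burst of small synchronous writes, which is (I’m assuming) close to what an editor does when it creates its lock file. This is only a sketch: it relies on GNU dd’s `oflag=dsync`, the writes are sequential rather than random, and `small_sync_writes` and the scratch file name are made up for the example.

```shell
# small_sync_writes DIR [COUNT]
# Writes COUNT 1 kB blocks to a scratch file in DIR, with each block written
# synchronously (GNU dd, oflag=dsync), then prints dd's summary line showing
# the elapsed time and throughput.
small_sync_writes() {
  dir="${1:-/tmp}"
  count="${2:-1000}"
  scratch="$dir/small-sync-writes.$$"   # hypothetical scratch file name
  dd if=/dev/zero of="$scratch" bs=1k count="$count" oflag=dsync 2>&1 | tail -n 1
  rm -f "$scratch"
}
```

Point it at a directory that actually lives on the disk you care about (on many systems `/tmp` is a RAM-backed tmpfs, which would tell you nothing). If the controller is the bottleneck, I’d expect the RAID volume to come out far slower than a standalone disk here, much like the Random Write rows.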

Okay – So now I know it’s the PERC at fault or the RAID config on it.

Next I put in another SSD, the same type as those in the RAID, but as a standalone drive (not in the RAID), and here are the results:

| Metric | RAID-5 SSD | SSD Standalone | Difference (Standalone vs. RAID-5) |
|---|---|---|---|
| Sequential Writes (Child) | 314,682.91 kB/sec | 511,280.50 kB/sec | +62.5% |
| Sequential Writes (Parent) | 177,522.16 kB/sec | 128,016.83 kB/sec | -27.9% |
| Sequential Rewrites (Child) | 428,456.94 kB/sec | 467,547.38 kB/sec | +9.1% |
| Sequential Rewrites (Parent) | 180,007.46 kB/sec | 79,698.26 kB/sec | -55.7% |
| Random Reads (Child) | 404,707.62 kB/sec | 439,705.72 kB/sec | +8.6% |
| Random Reads (Parent) | 404,410.90 kB/sec | 437,549.83 kB/sec | +8.2% |
| Random Writes (Child) | 126,042.59 kB/sec | 319,127.09 kB/sec | +153.2% |
| Random Writes (Parent) | 4,497.75 kB/sec | 125,458.00 kB/sec | +2,689.3% (!) |

So the parent-reported sequential write and rewrite figures were down on the standalone disk (by about 28% and 56%), but every other figure is better on the standalone SSD, and the random writes are way better.
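The Difference column in both tables is just the relative change from the RAID figure to the standalone figure. Here’s a quick sketch of that arithmetic in shell, assuming awk is available; `pct_diff` is a name made up for this example.

```shell
# pct_diff BASELINE OTHER
# Prints how much OTHER differs from BASELINE as a signed percentage.
# (pct_diff is a hypothetical helper name, not part of iozone.)
pct_diff() {
  awk -v base="$1" -v other="$2" \
    'BEGIN { printf "%+.1f%%\n", (other - base) / base * 100 }'
}

# Random Write (Parent): SSD RAID vs. standalone SAS drive, in kB/sec
pct_diff 4497.75 68971.35
```

That last line prints `+1433.5%`, which is the +1,434% in the first table before rounding to a whole number.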

So that’s my problem. I figure it’s something to do with how the RAID is configured, but after a few hours of messing around with every permutation of settings I tried, I couldn’t get these figures to markedly improve.

As this is a lab box I’ll just dismantle the RAID and run each LXC container / VM on a local (non-RAID) SSD, as data loss from a dying disk is not a concern in my use case, but hopefully this might be of use to someone else seeing the same.