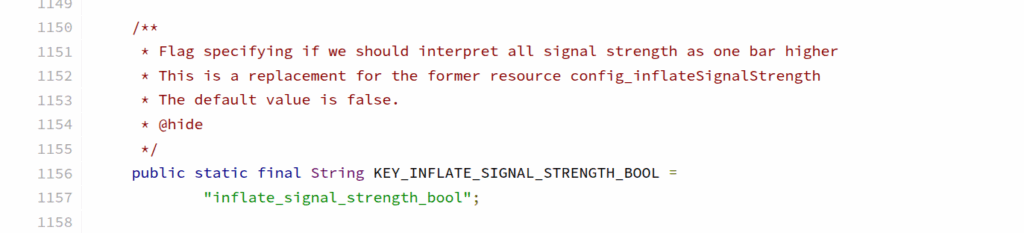

Poking around in Android the other day, I found this nugget in CarrierConfigManager: a flag, KEY_INFLATE_SIGNAL_STRENGTH_BOOL, that makes the phone always report signal strength to the user as one bar higher than it really is.

It’s not documented in the Android developer docs, but it’s there in the source, available for any operator to use.
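To make the behaviour concrete, here is a minimal Python sketch of what such a flag plausibly does. It assumes Android's usual signal level scale of 0 (none or unknown) to 4 (great) and assumes the inflated level is clamped at the top of that scale. The helper name `displayed_level` and the clamping are illustrative assumptions, not the actual AOSP code.

```python
# Android reports signal strength as a level from 0 (none/unknown) to 4 (great).
MIN_LEVEL = 0
MAX_LEVEL = 4


def displayed_level(real_level: int, inflate: bool) -> int:
    """Return the level shown to the user (hypothetical helper).

    When `inflate` is True (standing in for KEY_INFLATE_SIGNAL_STRENGTH_BOOL),
    the real level is bumped by one bar and clamped so it never exceeds
    MAX_LEVEL. The clamping is an assumption made for this sketch.
    """
    if not MIN_LEVEL <= real_level <= MAX_LEVEL:
        raise ValueError(f"level must be in [{MIN_LEVEL}, {MAX_LEVEL}]")
    if inflate:
        return min(real_level + 1, MAX_LEVEL)
    return real_level


if __name__ == "__main__":
    for level in range(MIN_LEVEL, MAX_LEVEL + 1):
        print(level, "->", displayed_level(level, inflate=True))
```

Under these assumptions, a phone with no usable signal would still show one bar, and real levels 3 and 4 would both be drawn as full signal.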

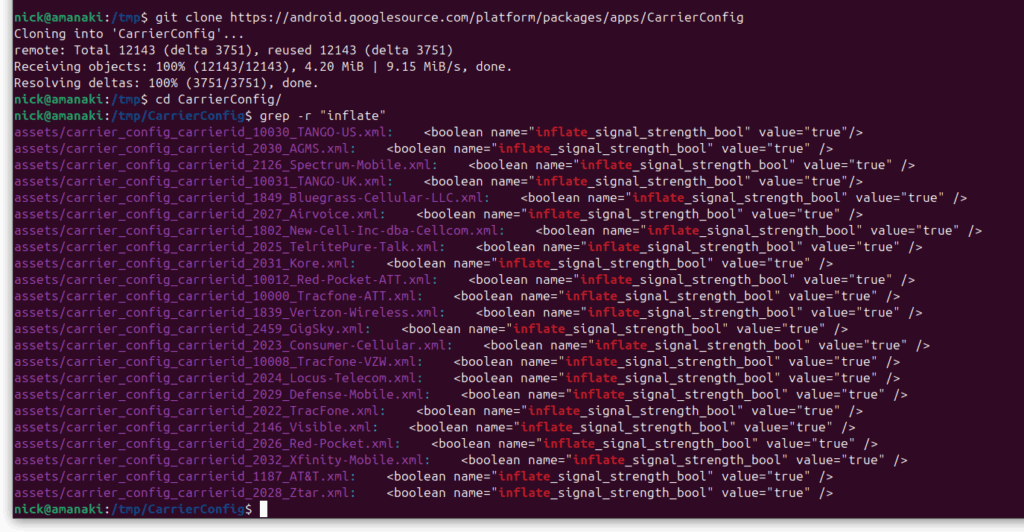

Notably, both AT&T and Verizon have this flag enabled on their networks. I’m not sure who requested that it be added to Android, and I couldn’t find it in the git blame either, but we can see it in the CarrierConfig files that hold the network settings for each of these operators.

Operators are always claiming to have the widest coverage or the best network, but stuff like this, along with the fake 5G flags, doesn’t help build trust, especially considering the magic mobile phone antennas that negate the need for all this deception anyway.

Waiting for the KEY_FIXED_NETWORK_SIGNAL_STRENGTH_INT flag.

Why stop at inflating by one bar when you can just fix it to always show full reception?

The user must just be holding it wrong if throughput doesn’t live up to the five-bar network indicator…

This is all hearsay, but there were news articles to back some of this up.

When 4G came out, the same throughput and data loss were achievable with a weaker signal.

Most users saw fewer bars on 4G yet experienced higher signal quality and throughput. This led to complaints about signal quality that were based not on actual call quality but on the perception created by the number of bars.

The answer was to inflate the “bars” to match perceived performance: one bar of 4G was a reliable signal, unlike one bar of 3G.

It’s probably not needed any more, but if a carrier removed the extra bar, users would blame the carrier and switch service. If an OS update removed this bonus bar, everyone would blame Android. If Samsung did it but Pixel didn’t, you’d see complaints about poor signal on Samsung phones.

Really interesting insight, thanks. Was this about US 3G (CDMA?)

I know that for 2G, for example, uplink power was limited to 2 watts, while for LTE and above it’s 500 milliwatts (0.25x), and that had real-world consequences. I wonder if this is the same?
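Taking the comment’s figures at face value, the power ratio is easier to reason about in decibels, the unit link budgets are usually compared in:

$$
\begin{aligned}
P_{\text{dBm}} &= 10\log_{10}\!\left(\frac{P}{1\,\text{mW}}\right) \\
2\,\text{W} &= 10\log_{10}(2000) \approx 33.0\,\text{dBm} \\
500\,\text{mW} &= 10\log_{10}(500) \approx 27.0\,\text{dBm} \\
\Delta &= 10\log_{10}(0.25) \approx -6.0\,\text{dB}
\end{aligned}
$$

So, on those numbers, a 0.25x cap costs roughly 6 dB of uplink budget, which can matter at the edge of coverage.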

If that is correct, the right way to address it would have been for the OS to calculate signal strength differently for 4G, not the sloppy, abuse-prone “add me one bar because I say so”. This is deeply amateurish.
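A minimal sketch of the alternative this comment describes: map a measured signal value to bars using a separate threshold table per radio technology, instead of adding a fixed bar. The threshold values and the `bars_for` helper below are made-up placeholders for illustration, not real Android or carrier defaults, and a real implementation would use the metric appropriate to each technology (for example RSRP for LTE) rather than comparing raw dBm across technologies.

```python
from bisect import bisect_right

# Hypothetical per-technology thresholds in dBm, ascending. A reading at or
# above the i-th threshold earns at least i + 1 bars. These numbers are
# placeholders chosen for illustration, NOT real Android or carrier defaults.
THRESHOLDS_DBM = {
    "3g": [-110, -100, -90, -80],
    "4g": [-120, -110, -100, -90],
}


def bars_for(technology: str, signal_dbm: float) -> int:
    """Map a signal reading to 0-4 bars using that technology's own table."""
    thresholds = THRESHOLDS_DBM[technology]
    # bisect_right counts how many thresholds the reading meets or exceeds.
    return bisect_right(thresholds, signal_dbm)


if __name__ == "__main__":
    for tech in ("3g", "4g"):
        print(tech, bars_for(tech, -105))
```

With these placeholder tables, the same -105 dBm reading shows 1 bar on 3G but 2 bars on 4G, because the hypothetical 4G table assumes usable service at lower readings, rather than blindly adding a bar to every reading.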